About Ghaf

Ghaf Framework is an open-source project that provides information about our work and studies in the security technologies field in the context of embedded virtualization.

The applied software research supports Secure Systems Research Center (SSRC) focus areas.

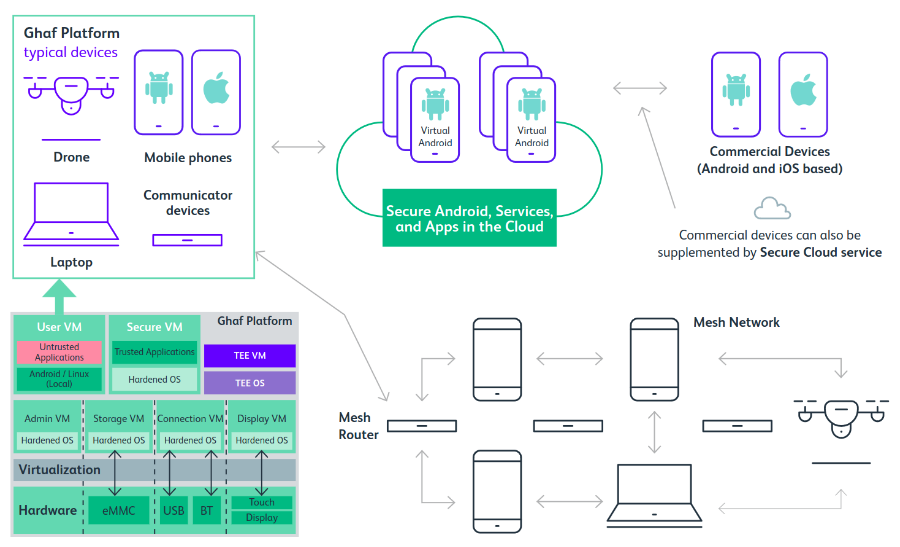

Ghaf Framework can be used to build the Ghaf Platform, which will provide an edge device software architecture with key features such as modularity and scalability through virtualization, support research and development of zero trust architecture (ZTA), and allow for low maintenance efforts while keeping the main code base stable and secure. The SSRC team focuses on research into enhancing ZTA so that it scales horizontally across edge HW platforms (phones, drones, laptops, communication modules) and vertically across SW platforms (Linux, Android, browser, applications).

The Ghaf Platform is a baseline software platform for edge devices, utilizing a virtualized architecture for research and product development aiming to achieve the following core objectives: apply the general security principles of zero trust within the software architecture, and act as an enabler for ZTAs within organizations.

Embedded Virtualization

Virtualization is one of the core enablers for transforming the traditionally monolithic software stack within edge devices into isolated components with a minimal trusted computing base (TCB) and clearly defined functionality.

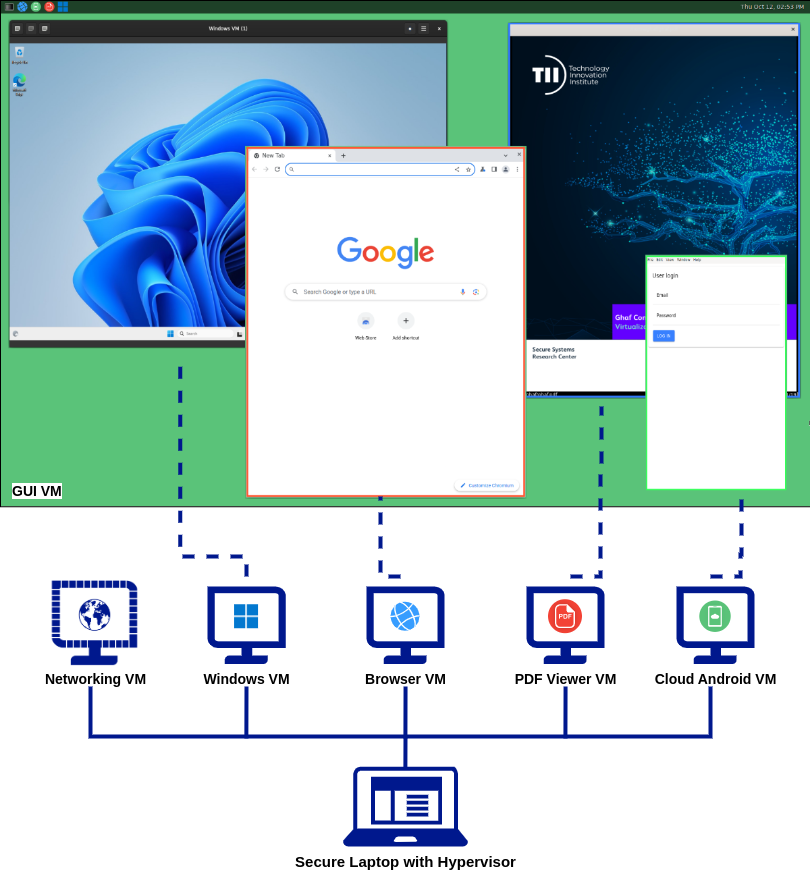

The Ghaf Platform utilizes a collection of virtual machines (VMs) to define a system.

In contrast to the traditional monolithic OS, this concept makes it possible to define and run host services in isolated environments, which breaks up the monolithic structure and allows for a modular system definition that is customizable for a specific use case. To this end, various applications and guest OSs can be deployed while simultaneously utilizing the Platform's features.

Ghaf Platform Applications

The Ghaf Platform development is focused on the transition to a modular architecture for edge devices. Products such as secure phones, drones, laptops, and other communication devices have unique challenges in their respective hardware and software ecosystems.

Enabling the integration of individual technology stacks into an organizational framework can be a challenging task. The Ghaf Platform is designed to ease this process and enable research to overcome a number of challenges.

Design Principles

The design principles influencing the architecture of the Ghaf Platform are the following:

- Edge security

The Ghaf security architecture under development by SSRC aims to provide an understandable yet comprehensive view of security controls in the Platform so that vendors can make informed decisions and adopt the Platform for their use cases. The security architecture and subsequent research will be published by SSRC in a series of technical white papers.

- Zero trust

The Ghaf Platform aims to apply the general security principles of zero trust within the software architecture and to act as an enabler for ZTA for edge devices within organizations.

- Trusted computing base

The general principle for establishing the trusted Ghaf Platform code base is to rely on audited software and proven security modules while carefully evaluating and integrating new concepts. The modularized platform not only simplifies the integration of additional security measures but also facilitates the integration of hardware security features. Leveraging and contributing to open-source projects is not only a cornerstone for the Platform components' maintainability but also for the toolchain to increase transparency and auditability. By providing a hardened code base for the hypervisor and OS for the various VMs in the architecture, the Ghaf Platform leverages security benefits across all modules.

- Configurable, declarative and reproducible

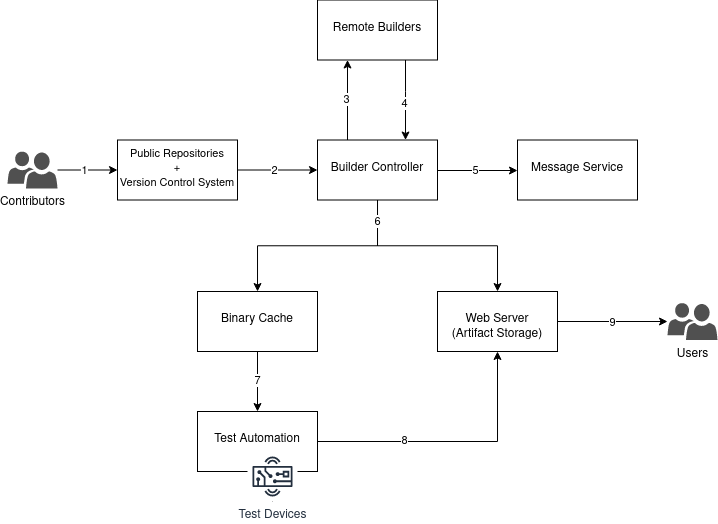

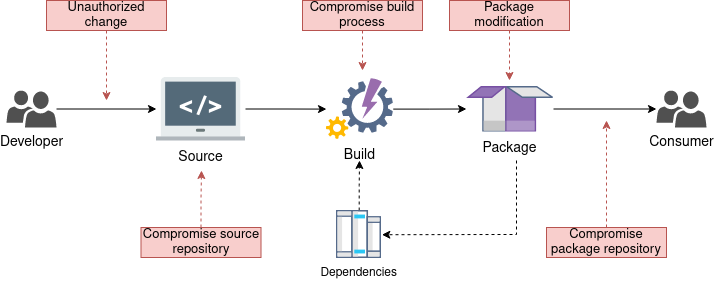

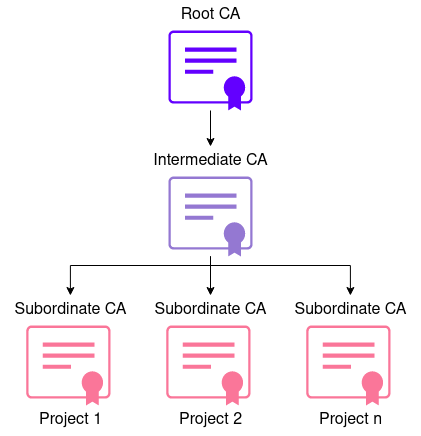

Build System and Supply Chain

As software supply chain security becomes more and more relevant to product security, it is necessary to provide mechanisms to assert reproducible builds, with a transparent chain from source code over the build environment to the final binaries. Such a system allows faster analysis of not only software bugs but also security vulnerabilities and their impact on a product without the need for extensive analysis. This approach further reduces the efforts required for patching and allows mechanisms for safe fallbacks to secure states.

For more information on Ghaf supply chain security, see Supply Chain Security.
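As a rough illustration of what asserting a reproducible build means in practice, the following sketch compares SHA-256 digests of two independently produced build outputs. It is not part of the Ghaf tooling: the function names and the sample byte strings are hypothetical stand-ins for real build artifacts.

```python
import hashlib


def digest(artifact: bytes) -> str:
    """Return the SHA-256 hex digest of a build artifact."""
    return hashlib.sha256(artifact).hexdigest()


def is_reproducible(build_a: bytes, build_b: bytes) -> bool:
    """Two builds of the same source are reproducible if they are bit-identical."""
    return digest(build_a) == digest(build_b)


# Hypothetical artifacts: in practice these would be image files read from disk.
first_build = b"ghaf-image-contents"
second_build = b"ghaf-image-contents"
tampered_build = b"ghaf-image-contents-with-extra-bytes"

print(is_reproducible(first_build, second_build))    # True
print(is_reproducible(first_build, tampered_build))  # False
```

Comparing digests rather than whole files keeps the check cheap to publish and to repeat by a third party.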

Features

The vision for the Ghaf platform is to create a virtualized, scalable reference platform that enables the building of secure products leveraging trusted, reusable, and portable software for edge devices. For more information on reference implementation for several devices, see Reference Implementations.

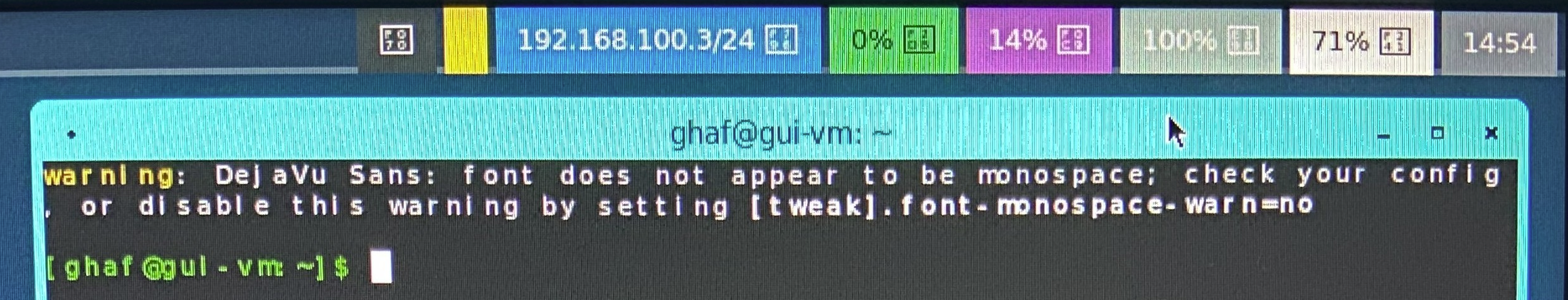

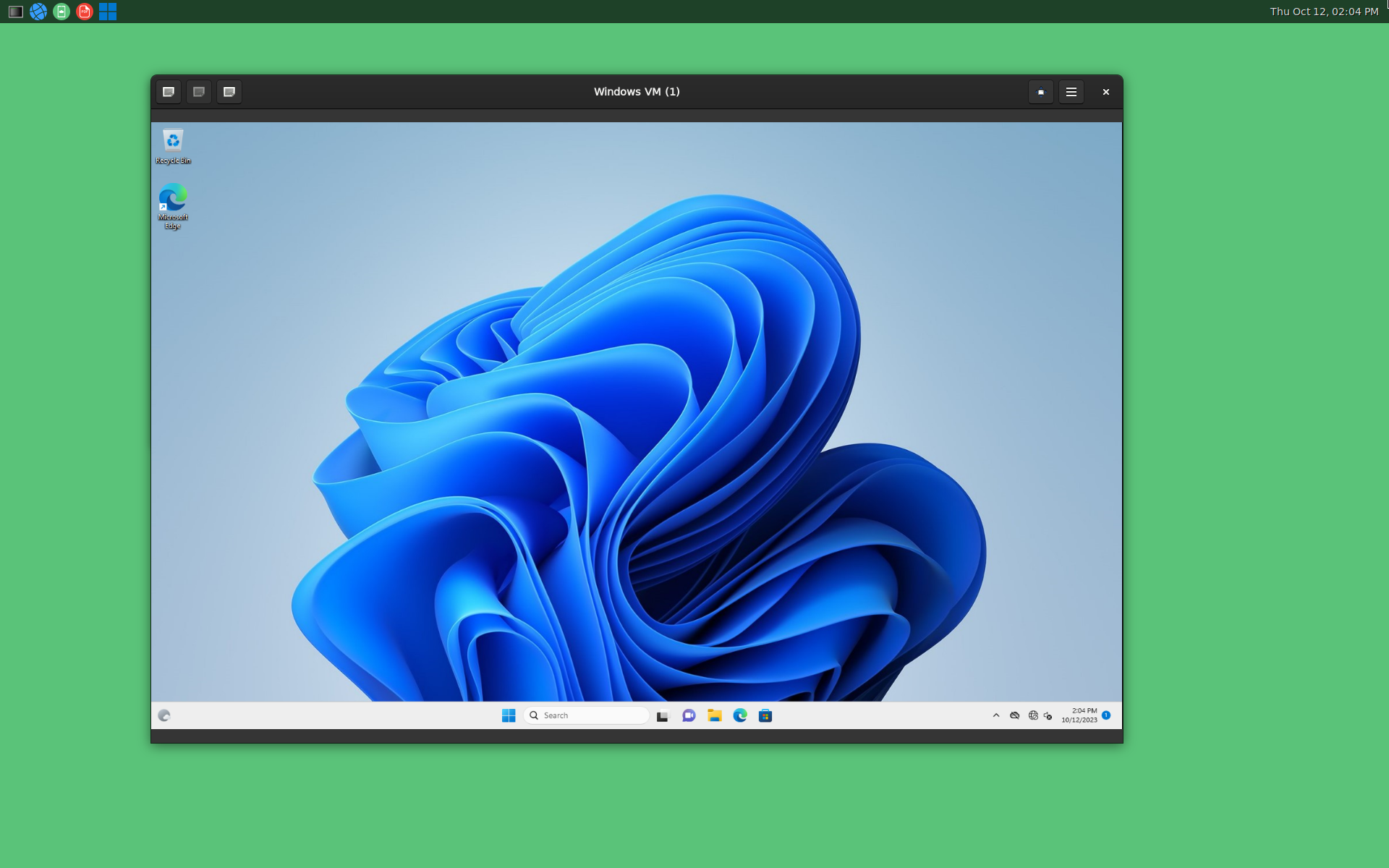

Ghaf demo desktop and applications are illustrated in the screen capture below:

Status

- ✅: integrated and tested in the main branch. No known regression.

- 🚧: prototyped or work in progress in the development branch.

- ❌: the feature has known regression or bugs.

Reference Devices

- Orin: NVIDIA Jetson AGX Orin as the main reference device.

- x86: generic x86_64; tested on Intel NUC (Next Unit of Computing) or laptop.

- Lenovo X1: Lenovo X1 Carbon Gen 11 laptop.

- aarch64: generic AArch64; tested on an ARM server, laptop (e.g. Apple MacBook), or NVIDIA Jetson AGX Orin.

- All variants: supported devices from Architectural Variants.

The following tables show the status of the Ghaf Platform features:

Release Builds and Hardware Architecture Support

| Feature | Status | Reference Device | Details |

|---|---|---|---|

| Ghaf in virtual machine | ✅ | x86 | nix run .#packages.x86_64-linux.vm-debug |

| aarch64 reference image | ✅ | Orin | Based on Jetson Linux, OE4T and jetpack-nixos. |

| aarch64 reference image | ✅ | imx8mp | Based on NXP BSP, implemented as nixos-hardware module |

| x86 generic image | ✅ | x86 | Generic x86 computer, based on generic NixOS. NOTE: requires device specific configuration. |

| Lenovo X1 reference image | ✅ | Lenovo X1 | x86_64 laptop computer, supports basic compartmentalized environment |

| Native build | ✅ | aarch64, x86 | Remote aarch64 NixOS builders recommended |

| Cross-compilation | 🚧 | aarch64, riscv64 | Depends on NixOS nixpkgs 23.05 support for cross-compilation |

| CI builds | ✅ | All | Only main-branch, not for all PRs. |

| Emulated build | ❌ | aarch64 | binfmt, may freeze the build machine. Not recommended. See instructions. |

Development

| Feature | Status | Reference Device | Details |

|---|---|---|---|

| Quick target update | ✅ | all | nixos-rebuild --flake .#nvidia-jetson-orin-debug --target-host root@ghaf-host --fast switch |

| aarch64 device flashing | ✅ | Orin | Full device software flashing using x86 machine |

| root filesystem flashing | ✅ | x86, imx8mp | dd image to bootable media - see |

| Debug: SSH | ✅ | Orin, x86 | Host access only in -debug-target, see authentication.nix |

| Debug: Serial | ✅ | all | Host access only in -debug-target - e.g. screen /dev/ttyACM0 115200 |

| Compartmentalized environment | 🚧 | Lenovo X1 | Net VM, GUI VM (with GPU passthrough) plus some App VMs |

Target Architecture

| Feature | Status | Reference Device | Details |

|---|---|---|---|

| Minimal host | 🚧 | all | See Minimal Host and PR #140. |

| Net VM | ✅ | Orin | See Net VM. Passthrough with Wi-Fi works but requires SSID/password configuration. |

| IDS VM | ✅ | Orin, Lenovo X1 | Defensive networking mechanism. |

| GUI VM | 🚧 | All, Lenovo X1 | Implemented for Lenovo X1 reference device, other devices have Wayland compositor running on the host. |

| App VM | 🚧 | All, Lenovo X1 | Implemented for Lenovo X1 reference device: Chromium, GALA and Zathura VMs. Requires GUI VM in place. |

| Admin VM | ✅ | All | Not started |

| Inter VM comms - IP-based | 🚧 | All | -debug-targets have network bridges to access VMs from host. |

| Inter VM comms - shared memory | 🚧 | All | |

| Inter VM Wayland | 🚧 | All | Currently it is waypipe over SSH, for test and demo purpose only. |

| SW update | 🚧 | All | A/B update tooling being evaluated. |

| USB passthrough | 🚧 | Orin | No reference implementation integrated yet. |

| PCI passthrough | ✅ | All | Used for reference in Net VM on Orin. |

| UART passthrough | 🚧 | Orin | See NVIDIA Jetson AGX Orin: UART Passthrough. Not integrated to any VM. |

| ARM platform bus devices passthrough | 🚧 | Orin | NVIDIA BPMP virtualization being developed |

Applications and VM Control

| Feature | Status | Reference Device | Details |

|---|---|---|---|

| Wayland-compositor | 🚧 | Orin, x86 | Implemented for Lenovo X1 |

| Chromium | 🚧 | Orin, x86 | Implemented for Lenovo X1 |

| Element | 🚧 | Orin, x86 | On host |

| Cloud Android (CVD) client application (GALA) | 🚧 | Orin, x86 | Implemented for Lenovo X1 |

| Virtualization control | 🚧 | All | See vmd design. |

Next Steps

See discussion for the outline of next steps

Architecture

The main architectural concept of the Ghaf Platform is to break up the traditional monolithic structure into modularized components: virtual machines (VMs). These VMs, running on a hardened host OS, implement the Ghaf edge virtualization platform.

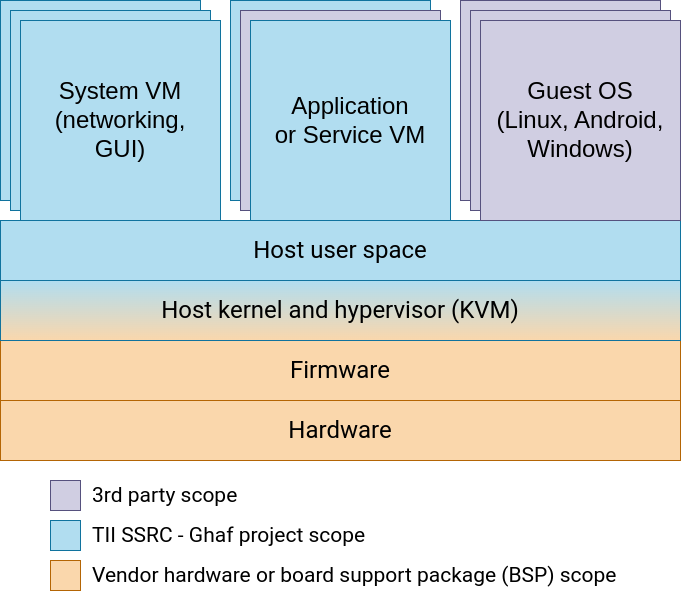

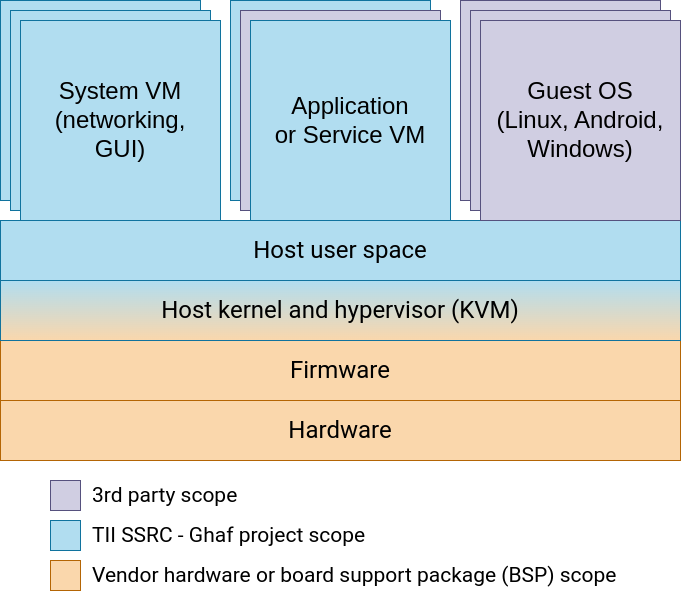

Embedded virtualization builds on cloud technologies in the development of end-to-end security. With hardware support for virtualization, we provide a hardened system with a small trusted computing base (TCB), a thin host, that enables isolation of use cases and their resources. Use cases are protected in guest virtual machines (VMs). Embedded targets are small devices (personal or headless) instead of high-performance cloud servers. Our scope is illustrated in the following diagram. For more information, see stack.

If you are interested in why we do something this way instead of that way, see Architecture Decision Records.

The Ghaf Platform components are used in reference configurations to build images for reference devices. For more information, see Reference Implementations.

Architectural Variants

The main scope of the Ghaf platform is edge virtualization. However, to support modular development and testing of the platform, variants are supported with the following definitions:

- Default

A default variant. Supports minimal host, GUI VM[1] and netvm. May host other VMs. For more information, see Stack.

- Headless

A variant with minimal host and netvm. May host other VMs but does not have a GUI VM or graphics stack on a host.

- Host only

A variant with minimal host only. A user can manually install software to a host, including VMs (if supported by hardware).

- No virtualization

A variant for hardware with no support for virtualization. May run any software, similar to popular Linux distributions, but cannot support guest virtual machines. May host any software deployed directly on a host.

| Variant Name | Headless | Graphics | VMs | Devices |

|---|---|---|---|---|

| Default | No | GUI VM | Supported | Jetson, generic x86 |

| Headless | Yes | No | Supported | Jetson, generic x86 |

| Host Only | Yes | No | May be supported but not included | Jetson, generic x86 |

| No Virtualization | Yes or no | Native on host | Not supported | Raspberry Pi, RISC-V |
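The variant table can also be read as data. The sketch below encodes its rows in a small Python structure and filters them by device and VM support. The class and function names are hypothetical and exist only for this illustration; Ghaf itself declares variants in Nix.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    name: str
    headless: str   # "Yes", "No", or "Yes or no", as in the table
    graphics: str
    vms: str
    devices: tuple


# Rows copied from the variant table above.
VARIANTS = (
    Variant("Default", "No", "GUI VM", "Supported", ("Jetson", "generic x86")),
    Variant("Headless", "Yes", "No", "Supported", ("Jetson", "generic x86")),
    Variant("Host Only", "Yes", "No", "May be supported but not included",
            ("Jetson", "generic x86")),
    Variant("No Virtualization", "Yes or no", "Native on host", "Not supported",
            ("Raspberry Pi", "RISC-V")),
)


def variants_for(device: str, need_vms: bool):
    """Return variant names listed for a device, optionally only those with VMs supported."""
    return [
        v.name
        for v in VARIANTS
        if device in v.devices and (not need_vms or v.vms == "Supported")
    ]


print(variants_for("Jetson", need_vms=True))         # ['Default', 'Headless']
print(variants_for("Raspberry Pi", need_vms=False))  # ['No Virtualization']
```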

[1] As of early 2023, the graphics stack is deployed on a host to support application development. Work is ongoing to define the GUI VM and isolate graphics with GPU passthrough.

Architecture Decision Records

Architecturally significant decisions are recorded as architecture decision records (ADR).

The Ghaf platform decision log:

| Decision Record | Status |

|---|---|

| Minimal Host | Proposed. |

| Networking VM | Proposed, partially implemented for development and testing. |

| IDS VM | Proposed, partially implemented for development and testing. |

| Platform Bus for Rust VMM | Proposed, WIP. |

To create an architectural decision proposal, open a pull request and use the decision record template. Contributions to the Ghaf architecture decisions are welcome.

Minimal Host

Status

Proposed.

Context

Ghaf uses the default NixOS configuration as a baseline to build the target image.

The default NixOS configuration is targeted at more general use and includes multiple packages that do not support the Ghaf design target of a minimal TCB to protect the host. Depending on the flexibility of the NixOS configuration, the Ghaf minimal host may require new development to support the requirements.

The Ghaf host configuration imports the NixOS minimal profile, which suits the minimal TCB better. In addition, the modular declarative profile enables further optimization of the minimal TCB while supporting other profiles that suit the evaluation of other objectives such as feasibility studies of additional functionality, security and performance.

Requirements

The following table describes the development requirements of the minimal host. All requirements originate from TII SSRC unless otherwise noted. Scope further defines:

- target configuration: R for release, D for debug

- architectural variant: V for virtualization supporting variant, A for all, including No Virtualization

Compliance states the progress of requirement compliance as follows:

- D for Designed, design requirement from TII SSRC for analysis and evaluation.

- I for Implemented, design requirement met with possible limitations documented under Consequences.

- P for Proposed, raised for discussion but not yet designed.

- M for Met, the requirement is reviewed and approved at technology readiness level 4.

| ID | Requirement | Description | Scope | Compliance |

|---|---|---|---|---|

| MH01 | Defined in nix | Host declaration in nix | R&D,A | I |

| MH02 | Reduced profile | Remove unnecessary | R, V | I |

| MH03 | No networking | Host has no networking | R, V | D |

| MH04 | No graphics | Host has no GUI stack | R, V | D |

| MH05 | No getty | Host has no terminal | R, V | P |

| MH06 | No nix tooling | Only /nix/store, no nix | R, V | P |

| MH07 | Minimal defconfig | Host kernel is minimized | R, V | D |

| MH08 | Update via adminvm | A/B update outside host | R, V | P |

| MH09 | Read-only filesystem | Mounted RO, integrity checked | R, V | P |

This list of requirements is not yet comprehensive and may be changed based on findings of further analysis as stated in the following section.
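To make the Scope and Compliance codes easier to read, the following sketch decodes them for a few rows of the table. The dictionaries restate the legend given earlier in this section; the function name and the descriptive strings are hypothetical and only serve the illustration.

```python
TARGETS = {"R": "release", "D": "debug"}
VARIANTS = {"V": "virtualization-supporting variants", "A": "all variants"}
COMPLIANCE = {"D": "Designed", "I": "Implemented", "P": "Proposed", "M": "Met"}


def parse_scope(scope: str):
    """Split a Scope cell such as 'R&D,A' or 'R, V' into (targets, variant)."""
    target_part, variant_part = (part.strip() for part in scope.split(","))
    targets = [TARGETS[code.strip()] for code in target_part.split("&")]
    return targets, VARIANTS[variant_part]


# A few rows from the table: (ID, Scope, Compliance)
rows = [("MH01", "R&D,A", "I"), ("MH03", "R, V", "D"), ("MH05", "R, V", "P")]

for req_id, scope, compliance in rows:
    targets, variant = parse_scope(scope)
    print(req_id, targets, variant, COMPLIANCE[compliance])
```

Note that the letter D appears in both columns with different meanings (debug in Scope, Designed in Compliance), which is why the two lookups are kept separate.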

Decision

This ADR adopts a custom-developed minimal profile using nixpkgs. It reduces both image and root partition size by eliminating host OS content per the requirements and implements a minimal TCB.

The current implementation of NixOS is overridden. For more information on a minimal host profile, see minimal.nix.

With the progress of implementing the requirements, the minimal host customization will be illustrated.

Consequences

Defined in nix (MH01)

Ghaf minimal host module is implemented in nix modules.

Currently, host and VM declarations are implemented using microvm.nix, but this is not a strict requirement for Ghaf release mode declarations if the limitations or dependencies of microvm.nix do not comply with other requirements. This may require separate release mode custom nix declarations to support flexibility with microvm.nix in debug mode.

Reduced profile (MH02)

The initial Ghaf minimal profile host size reduction is implemented with metrics on the total host size and a breakdown of the size of the largest dependencies. Based on the metrics, further analysis is needed on several key modules including, but not limited to, kernel, systemd and nixos.

No networking (MH03)

Currently, the Ghaf host profile for both release and debug targets has networking. The requirement of no networking on the release target requires a declarative host configuration where:

- The release target host kernel is built without networking support. Networking must be enabled for debug target.

- The release target host user space has no networking tools nor configurations. Access to tools on host must be enabled for debug target.

To support development of configuration changes between release and debug target, the debug target must support networking. This also supports No Virtualization-variant development in which networking must be enabled.

The exception to no networking requirement is the virtual machine manager control socket from host to guest(s). The amount of required kernel configuration dependencies and impact to different VMMs must be further analyzed.

No networking has impact on how vmd adminvm to host communication is implemented. With no networking, shared memory is proposed.

No networking may have an impact on how the guest-to-guest inter virtual machine communication configuration must be implemented with VMMs. This must be further analyzed.

No graphics (MH04)

Ghaf minimal host profile for release target has no graphics. Graphics will be compartmentalized to GUI VM. All graphics and display output related components and dependencies, including kernel drivers, must be removed from kernel configuration. Those are to be passed through to GUI VM.

No getty (MH05)

Ghaf host in release mode must have no terminals (TTYs) to interact with. In the current state of development, this cannot be enabled yet and has a minimum requirement of system logging outside the host. The proposed design for this requirement is to enable getty declaratively only in a debug serial terminal under modules/development.

No nix tooling (MH06)

Ghaf host in release mode has no nix tooling to work with the /nix/store. The /nix/store is only used to build the host system. In release mode, no modifications to nix store are possible. Changes are handled with update (MH08).

Ghaf host in debug mode must support nix tooling via read-writable host filesystem. This must be taken into account in build-time nix module declarations.

Minimal defconfig (MH07)

Ghaf host release mode kernel configuration must be minimal and hardened within the limits of the HW vendor BSP. Kernel configuration per device is to be further analyzed iteratively. Limitations are to be documented per target device kernel configuration and HW support for virtualization.

Update via adminvm (MH08)

Ghaf host release mode filesystem updates are to be implemented using an A/B update mechanism from adminvm. This will be designed and covered in a separate ADR.

Read-only filesystem (MH09)

Ghaf minimal host in release mode must be implemented with a read-only, integrity-checked (dm-verity) filesystem.
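The idea behind an integrity-checked read-only filesystem can be sketched with a hash tree: every block is hashed, the hashes are combined upward, and only the single root hash needs to be trusted. The code below is a simplified binary tree for illustration only. It does not reproduce the actual dm-verity on-disk format, and the block size and sample image are assumptions.

```python
import hashlib

BLOCK_SIZE = 4096  # bytes; chosen here for illustration


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def root_hash(image: bytes) -> bytes:
    """Hash every block, then hash pairs of hashes upward until one root remains."""
    level = [sha256(image[i:i + BLOCK_SIZE]) for i in range(0, len(image), BLOCK_SIZE)]
    if not level:
        level = [sha256(b"")]
    while len(level) > 1:
        if len(level) % 2:               # duplicate the last hash on odd-sized levels
            level.append(level[-1])
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


# Hypothetical read-only image of three blocks.
image = b"A" * BLOCK_SIZE + b"B" * BLOCK_SIZE + b"C" * BLOCK_SIZE
trusted_root = root_hash(image)          # recorded at build time

# Flipping a single bit changes the root hash, so the modification is detected.
modified = bytearray(image)
modified[5000] ^= 0x01
print(root_hash(image) == trusted_root)            # True
print(root_hash(bytes(modified)) == trusted_root)  # False
```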

Networking Virtual Machine

Status

Proposed, partially implemented for development and testing.

Networking VM (Net VM) reference declaration is available at microvm/netvm.nix.

Context

Ghaf's high-level design target is to secure a monolithic OS by modularizing the OS into networked VMs. The key security target is to not expose the trusted host directly to the Internet. This isolates the attack surface from the Internet to Net VM.

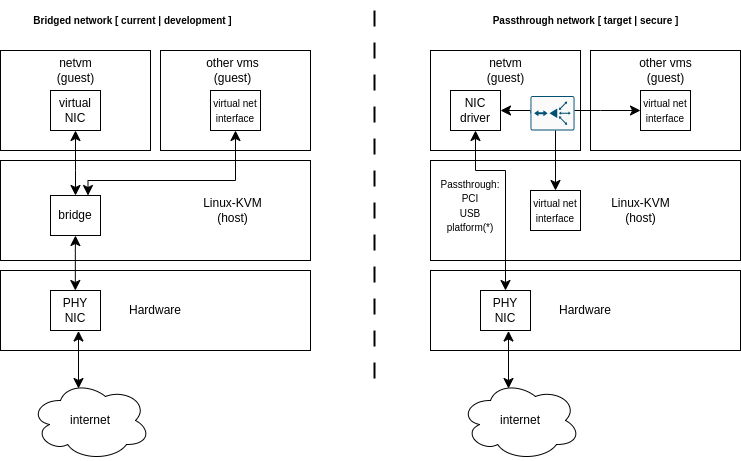

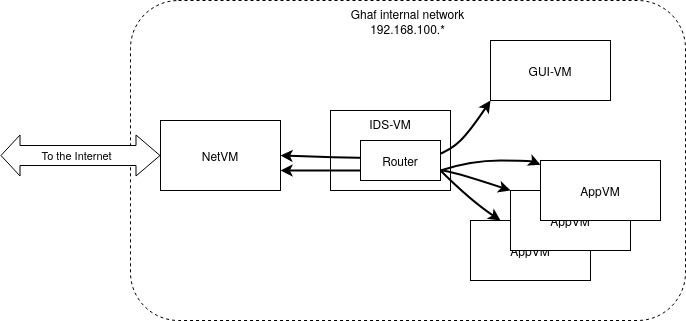

The following context diagram illustrates development and secure scenarios:

Left: An insecure development scenario. The host is directly connected to the Internet, and the network is bridged from the host to other parts of the system.

Right: A secure scenario. The network is passed through to Net VM and routed to other parts of the system.

Decision

The development scenario simplifies the target system network access and configuration. This ADR proposes that the development Net VM configuration be maintained to support system development.

The secure scenario is proposed to be implemented with passthrough, using DMA and remapping to assign the host physical network interface card (PHY NIC) to Net VM. This cannot be generalized for all hardware targets as it requires:

- Low-level device tree configuration for bootloader and host (at least on platform NIC).

- VMM host user space NIC bus mapping from the host to Net VM.

- Native network interface driver (not virtual) in Net VM. The native driver is bound to the kernel version supported by the vendor BSP.

These depend on the hardware setup. The proposed target setup is that the passthrough network device(s) are implemented as declarative nix-modules for easier user hardware-specific configuration. In practice, a user may configure the declaration of a PCI or USB network card that is available in their hardware setup.

Net VM will provide a dynamic network configuration:

- A DHCP server for Net VM to provide IP addresses for the other parts of the system, both static and dynamic.

- Routing from Net VM to the Internet and/or Inter VM.
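The combination of static and dynamic addresses can be sketched with Python's standard ipaddress module. Everything concrete below is an assumption made for the example: the subnet, the VM names, the static lease, and the LeaseTable class are not taken from the Ghaf sources, and a real Net VM would rely on an actual DHCP server.

```python
import ipaddress

# Hypothetical internal subnet and static leases; not taken from the Ghaf sources.
SUBNET = ipaddress.ip_network("192.168.100.0/28")
STATIC_LEASES = {"gui-vm": ipaddress.ip_address("192.168.100.3")}
NETVM_ADDRESS = ipaddress.ip_address("192.168.100.1")


class LeaseTable:
    """Hands out static addresses first, then the next free address from the pool."""

    def __init__(self):
        self.leases = {}

    def request(self, client: str):
        if client in self.leases:
            return self.leases[client]
        if client in STATIC_LEASES:
            address = STATIC_LEASES[client]
        else:
            reserved = {NETVM_ADDRESS, *STATIC_LEASES.values(), *self.leases.values()}
            address = next(a for a in SUBNET.hosts() if a not in reserved)
        self.leases[client] = address
        return address


table = LeaseTable()
print(table.request("gui-vm"))       # 192.168.100.3 (static)
print(table.request("chromium-vm"))  # 192.168.100.2 (first free dynamic address)
print(table.request("zathura-vm"))   # 192.168.100.4
```

Static leases are never handed to other clients, so a VM with a fixed role keeps a predictable address while the rest draw from the pool.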

For common reference hardware with platform NIC, the configured modules for network interface passthrough are provided. For more information, see i.MX 8QM Ethernet Passthrough.

Details of other network components, such as default firewall rules, DHCP (static and dynamic client addresses), routing, reverse proxies and security monitoring are to be described in their respective architecture decision records. In this context, these are illustrated in the context diagram on the right side of the Net VM network interface driver.

Consequences

Isolating the attack surface from the host to networking-specific guest VM makes it easier to protect the critical host system from compromise. The isolation also makes it easier to deploy further security, such as a zero trust policy engine or intrusion detection system (IDS).

Isolation makes configuration and comprehension of the system more difficult.

Intrusion Detection System Virtual Machine

Status

Proposed, partially implemented for development and testing.

Intrusion Detection VM (IDS VM) reference declaration will be available at microvm/idsvm.nix.

Context

Ghaf's high-level design target is to secure a monolithic OS by modularizing the OS into networked VMs. The key security target is to detect intrusions by analyzing the network traffic in the internal network of the OS.

Decision

The main goal is to have a networking entity in Ghaf's internal network so that all network traffic goes through that entity. Traffic then can be analyzed to detect possible intrusions in inter VM communication and outgoing network traffic (from VM to the Internet). This goal is achieved by introducing a dedicated VM and routing all networking from other VMs to go through it. Then it is possible to use various IDS software solutions in IDS VM to detect possible suspicious network activities.
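The routing rule described above can be modelled in a few lines: every path from a VM passes through the IDS VM before it reaches another VM or the Internet. The VM names are hypothetical, and placing Net VM behind the IDS VM for outgoing traffic is an assumption of this sketch rather than a statement about the actual Ghaf network layout.

```python
# Hypothetical VM names; the only rule modelled is "every hop goes via the IDS VM".
IDS_VM = "ids-vm"
NET_VM = "net-vm"
INTERNET = "internet"


def path(source: str, destination: str):
    """Return the hops a packet takes when the IDS VM is every VM's gateway."""
    hops = [source]
    if source != IDS_VM:
        hops.append(IDS_VM)
    if destination == INTERNET:
        hops.append(NET_VM)   # assumed: outgoing traffic leaves through Net VM
    hops.append(destination)
    return hops


flows = [("chromium-vm", "zathura-vm"), ("chromium-vm", INTERNET)]
for src, dst in flows:
    route = path(src, dst)
    assert IDS_VM in route    # the single checkpoint sees every flow
    print(" -> ".join(route))
```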

Consequences

A dedicated IDS VM provides a single checkpoint to detect intrusions and anomalies in the internal network of the OS and to initiate required countermeasures.

Routing and analyzing the network traffic in a separate VM will reduce network performance.

rust-vmm—Bus Passthrough Support for Rust VMMs

Status

Proposed, work in progress.

Context

This ADR is a work-in-progress note for Ghaf bus passthrough implementation that will support rust-vmm-based hypervisors.

Note: rust-vmm is an open-source project that empowers the community to build custom Virtual Machine Monitors (VMMs) and hypervisors. For more information, see https://github.com/rust-vmm/community.

It is crucial to have bus device passthrough support for ARM-based hardware, as the bus is mainly used to connect the peripherals. Currently, the only hypervisor with some support for the Platform bus is QEMU, but that code dates from 2013 and is not frequently used.

On the other hand, one of the target hardware devices for Ghaf is NVIDIA Orin with an ARM core. To achieve Ghaf's security and hardware isolation goals, devices should support passthrough mode. Production-ready rust-vmm-based hypervisors (crosvm, Firecracker, Cloud Hypervisor) do not have support for Platform bus.

Decision

The decision is to implement Platform bus passthrough as a base framework for Rust VMM. This will make it possible to use this mode within production-ready rust-vmm-based hypervisors. The main candidate here is crosvm. The necessity to support the Platform bus in other hypervisors is subject to discussion. Technically, the Platform bus is a rather simple bus: it manages memory mapping and interrupts. Information about devices is not dynamic but is read from the device tree during the boot stage.
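Because a platform device is described statically by its memory-mapped region and its interrupts, the bookkeeping a VMM needs can be sketched as plain data. The model below is an illustration only: the class, the conflict rule, and all addresses and IRQ numbers are hypothetical and do not come from rust-vmm, crosvm, or any real device tree.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlatformDevice:
    """Static description of a platform device, as a device tree node would give it."""
    name: str
    mmio_base: int   # start of the memory-mapped register region
    mmio_size: int   # size of that region in bytes
    irqs: tuple      # interrupt numbers the device raises


def overlaps(a: PlatformDevice, b: PlatformDevice) -> bool:
    """True if the MMIO regions of two devices intersect."""
    return (a.mmio_base < b.mmio_base + b.mmio_size
            and b.mmio_base < a.mmio_base + a.mmio_size)


def can_pass_through(device: PlatformDevice, already_assigned) -> bool:
    """Assumed rule: a device may be assigned only if it shares no MMIO range or IRQ."""
    return not any(
        overlaps(device, other) or set(device.irqs) & set(other.irqs)
        for other in already_assigned
    )


# Hypothetical devices; the addresses and IRQ numbers are made up for illustration.
uart = PlatformDevice("uart", 0x1000_0000, 0x1000, (32,))
i2c = PlatformDevice("i2c", 0x1001_0000, 0x1000, (33,))
clash = PlatformDevice("clash", 0x1000_0800, 0x100, (40,))

print(can_pass_through(i2c, [uart]))    # True
print(can_pass_through(clash, [uart]))  # False
```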

The current status:

| Required Components | Status of Readiness |

|---|---|

| Host kernel side: | |

| VFIO drivers (to substitute real driver in host kernel) | -/+ |

| Host support for device trees | + |

| Guest kernel side: | |

| Device drivers for passthrough devices | + |

| Guest support for device trees | + |

| Rust VMM side: | |

| Bus support | Needs to be developed. |

| VMM support for device trees | Rudimentary, needs improvement. |

Hardening

This section describes how Ghaf is hardened, that is, how it is secured by reducing its attack surface.

Kernel

Ghaf has two types of kernels: host and guest. Hardening of these kernels varies in terms of hardware support and functionality required by the guest kernel in question. Within this context, the kernel always refers to the Linux operating system kernel.

Kernel Hardening Process

NixOS provides several mechanisms to customize the kernel. The main methods are:

- Declaring kernel custom configuration: usage in Ghaf.

Example of entering the kernel development shell to customize the .config and build it:

    ~/ghaf $ nix develop .#devShells.x86_64-linux.kernel-x86
    ...
    [ghaf-kernel-devshell:~/ghaf/linux-6.6.7]$ cp ../modules/hardware/x86_64-generic/kernel/configs/ghaf_host_hardened_baseline .config
    [ghaf-kernel-devshell:~/ghaf/linux-6.6.7]$ make menuconfig
    ...
    [ghaf-kernel-devshell:~/ghaf/linux-6.6.7]$ make -j$(nproc)
    ...
    Kernel: arch/x86/boot/bzImage

- Booting the built kernel with QEMU:

    [ghaf-kernel-devshell:~/ghaf/linux-6.6.7]$ qemu-system-x86_64 -kernel arch/x86/boot/bzImage

- Validating with kernel hardening checker:

    [ghaf-kernel-devshell:~/ghaf/linux-6.6.7]$ cp ../modules/hardware/x86_64-generic/kernel/configs/ghaf_host_hardened_baseline .config
    [ghaf-kernel-devshell:~/ghaf/linux-6.6.7]$ HS=../modules/hardware/x86_64-generic/kernel/host/configs GS=../modules/hardware/x86_64-generic/kernel/guest/configs
    [ghaf-kernel-devshell:~/ghaf/linux-6.6.7]$ ./scripts/kconfig/merge_config.sh .config $HS/virtualization.config $HS/networking.config $HS/usb.config $HS/user-input-devices.config $HS/debug.config $GS/guest.config $GS/display-gpu.config
    [ghaf-kernel-devshell:~/ghaf/linux-6.6.7]$ kernel-hardening-checker -c .config
    [+] Kconfig file to check: .config
    [+] Detected microarchitecture: X86_64
    [+] Detected kernel version: 6.6
    [+] Detected compiler: GCC 120300
    ...
    [+] Config check is finished: 'OK' - 188 / 'FAIL' - 8
    [ghaf-kernel-devshell:~/ghaf/linux-6.6.7]$ kernel-hardening-checker -c .config | grep 'FAIL: '
    CONFIG_CFI_CLANG |kconfig| y | kspp | self_protection | FAIL: is not found
    CONFIG_CFI_PERMISSIVE |kconfig| is not set | kspp | self_protection | FAIL: CONFIG_CFI_CLANG is not "y"
    CONFIG_MODULES |kconfig| is not set | kspp |cut_attack_surface| FAIL: "y"
    CONFIG_KCMP |kconfig| is not set | grsec |cut_attack_surface| FAIL: "y"
    CONFIG_FB |kconfig| is not set |maintainer|cut_attack_surface| FAIL: "y"
    CONFIG_VT |kconfig| is not set |maintainer|cut_attack_surface| FAIL: "y"
    CONFIG_KSM |kconfig| is not set | clipos |cut_attack_surface| FAIL: "y"
    CONFIG_TRIM_UNUSED_KSYMS |kconfig| y | my |cut_attack_surface| FAIL: "is not set"
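When the list of failed checks grows, it can help to post-process the checker output rather than read it by eye. The sketch below parses a few of the FAIL rows shown above and groups the options by reason. The field names (option, desired, source, reason, result) are an interpretation of the columns in that sample output, and the helper functions are hypothetical; they are not part of kernel-hardening-checker.

```python
SAMPLE_OUTPUT = '''\
CONFIG_CFI_CLANG |kconfig| y | kspp | self_protection | FAIL: is not found
CONFIG_MODULES |kconfig| is not set | kspp |cut_attack_surface| FAIL: "y"
CONFIG_KSM |kconfig| is not set | clipos |cut_attack_surface| FAIL: "y"
CONFIG_TRIM_UNUSED_KSYMS |kconfig| y | my |cut_attack_surface| FAIL: "is not set"
'''


def parse_failures(output: str):
    """Turn the FAIL rows of the checker's table into dictionaries."""
    failures = []
    for line in output.splitlines():
        fields = [field.strip() for field in line.split("|")]
        if len(fields) == 6 and fields[5].startswith("FAIL"):
            option, _kind, desired, source, reason, result = fields
            failures.append({
                "option": option,
                "desired": desired,
                "source": source,
                "reason": reason,
                "result": result,
            })
    return failures


def group_by_reason(failures):
    """Map each reason to the list of options that failed for it."""
    grouped = {}
    for failure in failures:
        grouped.setdefault(failure["reason"], []).append(failure["option"])
    return grouped


print(group_by_reason(parse_failures(SAMPLE_OUTPUT)))
```

Grouping by reason separates self-protection gaps from attack-surface findings, which are usually reviewed with different trade-offs in mind.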

Host Kernel

The host kernel runs on bare metal. The kernel is provided either by Linux upstream (x86_64) or by a vendor BSP. The default Ghaf host kernel on x86_64 comes from the Ghaf upstream package source nixpkgs or from a Nix-packaged hardware-specific BSP (for example, for the NVIDIA Jetson family of devices).

x86-64-linux

The host kernel hardening is based on Linux make tinyconfig. The default tinyconfig fails assertions on NixOS without modifications. The assertions are fixed in the ghaf_host_hardened_baseline Linux configuration under Ghaf modules/hardware/x86_64-generic/kernel/configs. The resulting baseline kernel configuration is generic for x86_64 hardware architecture devices.

In addition, NixOS (Ghaf baseline dependency) requires several kernel modules that are added to the config or ignored with allowMissing = true. As of now, the kernel builds and reaches early boot on Lenovo X1.

Virtualization Support

The host Virtualization support adds to the Ghaf baseline the kernel config dependencies that NixOS requires when virtualization is enabled. It can be enabled for Lenovo X1 with the flag ghaf.host.kernel_virtualization_hardening.enable.

Networking Support

The host Networking support adds to the Ghaf baseline the kernel config dependencies that NixOS requires when networking is enabled. It can be enabled for Lenovo X1 with the flag ghaf.host.kernel_networking_hardening.enable.

USB Support

USB support on host is for the -debug-profile builds, not for hardened host -release-builds. As of now, USB support needs to be enabled when debug access to the host via a USB Ethernet adapter (Lenovo X1) is needed or when the user wants to boot Ghaf from an external SSD. It is optional when Ghaf is used with an internal NVMe drive.

It can be enabled with the following flag ghaf.host.kernel_usb_hardening.enable for Lenovo X1.

User Input Devices Support

The User Input Devices support adds to the Ghaf baseline the kernel config dependencies that NixOS requires when user input devices are enabled. It can be enabled for Lenovo X1 with the flag ghaf.host.kernel_inputdevices_hardening.enable.

To enable GUI, set Virtualization, Networking and User Input Devices support. As of now, the kernel builds and can boot on Lenovo X1.
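The relationship between the flags and the kernel config fragments can be sketched as a lookup. The flag names are quoted from this section, but the mapping from each flag to a fragment file is an assumption based on the file names passed to merge_config.sh in the kernel hardening process, and the helper functions are hypothetical. In Ghaf itself these options are set in Nix.

```python
# Flag names are quoted from this section; the mapping to fragment files is an
# assumption based on the file names passed to merge_config.sh earlier.
FRAGMENT_FOR_FLAG = {
    "ghaf.host.kernel_virtualization_hardening.enable": "virtualization.config",
    "ghaf.host.kernel_networking_hardening.enable": "networking.config",
    "ghaf.host.kernel_usb_hardening.enable": "usb.config",
    "ghaf.host.kernel_inputdevices_hardening.enable": "user-input-devices.config",
}

GUI_FLAGS = (
    "ghaf.host.kernel_virtualization_hardening.enable",
    "ghaf.host.kernel_networking_hardening.enable",
    "ghaf.host.kernel_inputdevices_hardening.enable",
)


def fragments_to_merge(enabled_flags):
    """List the baseline plus one fragment per enabled flag, in a stable order."""
    fragments = ["ghaf_host_hardened_baseline"]
    fragments += [frag for flag, frag in FRAGMENT_FOR_FLAG.items() if flag in enabled_flags]
    return fragments


def gui_ready(enabled_flags) -> bool:
    """The GUI needs virtualization, networking and user input devices support."""
    return all(flag in enabled_flags for flag in GUI_FLAGS)


enabled = set(GUI_FLAGS)
print(fragments_to_merge(enabled))
print(gui_ready(enabled))             # True
print(gui_ready(set(GUI_FLAGS[:2])))  # False
```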

Debug Support

The Debug support on host is for the -debug-profile builds, not for hardened host -release-builds. It can be helpful when debugging functionality is needed in a development environment.

It can be enabled with the following flag ghaf.host.kernel.debug_hardening.enable for Lenovo X1.

Guest Support

The Guest support will add the required kernel config dependency to the Ghaf baseline by which NixOS has guest enabled. The added functionality is vsock for host-to-guest and guest-to-guest communication.

It can be enabled with the following flag guest.hardening.enable for Lenovo X1.

Guest Graphics Support

The Guest Graphics support will add the required kernel config dependency to the Ghaf baseline by which NixOS has guest graphics enabled. The added functionality is for guest with graphics support enabled.

It can be enabled with the following flag guest.graphics_hardening.enable for Lenovo X1.

Secure Boot

This section describes Secure Boot and how to create secure keys.

The reader is expected to know the fundamentals of UEFI and to have a basic understanding of the UEFI Secure Boot specification.

Enabling Secure Boot

TODO: This needs to be filled later with UKI description.

Creating Secure Boot Keys

Secure Boot keys can be created with sbctl, a Secure Boot Manager. sbctl is available in Nixpkgs as pkgs.sbctl.

After you have installed sbctl or entered a Nix shell, use the following command to create your Secure Boot keys:

$ sudo sbctl create-keys

This command creates the Secure Boot keys on the trusted system.

Current Implementation

For demonstration purposes, we use pre-generated secure keys, which are insecure because anyone who has the keys can break into the system. Currently, the Secure Boot feature is enabled in debug builds only, since secure key creation requires sudo rights.

Secure Boot Verification

- TODO: this needs to be filled.

Stack

The Ghaf stack includes a host with VMs. The host consists of two layers—OS kernel with hypervisor and OS user space—and provides virtualization for the guest VMs: system VMs, application or service VMs, or guest OSs. The stack layers and top-level components are illustrated in the following diagram.

At the lowest levels of the stack lie the vendor-provided hardware, firmware, and board support package (BSP). The Ghaf project aims to use the vendor-provided components either as they are or configured as supported by the vendor. Configuration may include, for example, host kernel hardening and including only selected components from the vendor BSP.

The Ghaf project provides the reference minimal host with user space as defined in the Minimal Host.

System VMs

Ghaf provides reference system VMs for networking, GUI and storage.

| System VM | Defined | Implementation Status |
|---|---|---|
| Networking | Yes | Partial |
| GUI (optional) | No | Reference Wayland on host, to be isolated to VM |

GUI VM is considered optional as it may not be needed in some headless configurations.

Application or Service VM

Ghaf should provide reference application VMs and service VMs that isolate respective software from the host. Depending on the use case requirements, these VMs will communicate with other parts of the system over networking and shared memory. As an example, application VMs (Wayland client) will communicate with the GUI VM (Wayland compositor) across the VM boundaries. This is called cross-domain Wayland. Another area, already partially implemented, is the networking VM that will securely provide Internet access to other VMs.

Guest OSs

Ghaf aims to support users with guest OSs such as other Linux distributions (Ubuntu, Fedora, etc.), Windows, and Android. Some of these have already been prototyped.

Contribution Guidelines

Improvements to code and documentation are welcome! We would love to get contributions from you. For more information, see CONTRIBUTING.md.

Some things that will increase the chance that your pull request is accepted faster:

- Spelling tools usage.

- Following our Style Guide.

- Writing a good commit message.

Reference Implementations

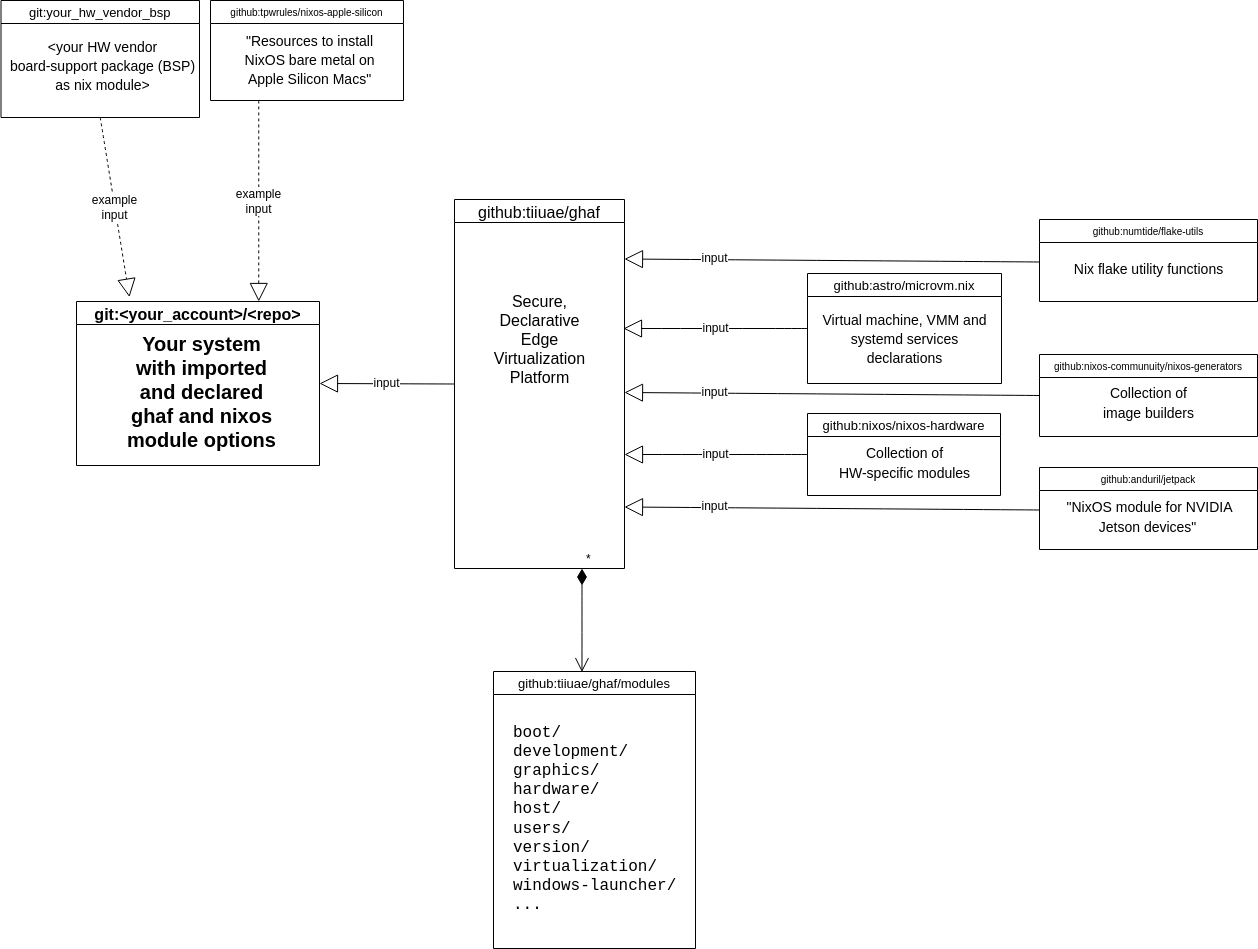

Our hardened OS targets are build configurations based on NixOS. The canonical URL for the upstream Nix git repository is: https://github.com/NixOS.

Build configurations define our dependencies and configuration changes to packages and build mechanisms of NixOS. If you want to try Ghaf, see Build and Run.

Approach

A build configuration is a target to build the hardened OS for a particular hardware device. Most packages used in a build configuration come from nixpkgs—NixOS Packages collection.

The upstream-first approach means we aim to fix issues by contributing to nixpkgs. At the same time, we get the maintenance support of the NixOS community and the benefits of the Nix language for building packages and tracking the origins of packages for software supply chain security. For more information, see Supply Chain Security.

NixOS, a Linux OS distribution packaged with Nix, provides us with:

- generic hardware architecture support (x86-64 and AArch64)

- declarative and modular mechanism to describe the system

- Nix packaging language mechanisms:

Although unmodified upstream is often preferred, even ideal, to ensure timely security updates, customizations are sometimes required.

Example

To support a reference board without a vendor board support package (BSP)—bootloader, kernel, device drivers—is often not feasible. With this approach, we can overlay the generic NixOS Linux kernel with the vendor kernel and add a vendor bootloader to build a target image.

Often the vendor BSPs are also open source but sometimes contain unfree binary blobs from the vendor's hardware. Those are handled by allowing unfree packages, provided the user agrees with the end-user license agreement (EULA). If not, unfree support can be dropped along with that part of the BSP support.

The same goes for the architectural variants, as headless devices and end-user devices differ in terms of what kind of virtual machines (VMs) they contain. An end-user device needs graphics architecture and VM support for the user interface (UI), whereas a headless device is more like a small server without the UI.

In This Chapter

Development

Ghaf Framework is free software, currently under active development.

The scope of target support is updated with development progress:

- Build and Run

- Running Remote Build on NixOS

- Installer

- Cross-Compilation

- Creating Application VM

- Hardware Configuration

- Profiles Configuration

- labwc Desktop Environment

- IDS VM Further Development

- systemd Service Hardening

Once you are up and running, you can participate in the collaborative development process by building a development build with additional options. For example, you can use the development username and password that are defined in accounts.nix.

If you authorize your development SSH keys in the ssh.nix module and rebuild Ghaf for your target device, you can use nixos-rebuild switch to quickly deploy your configuration changes to the target device over the network using SSH. For example:

nixos-rebuild --flake .#nvidia-jetson-orin-agx-debug --target-host root@<ip_address_of_ghaf-host> --fast switch

...

nixos-rebuild --flake .#lenovo-x1-carbon-gen11-debug --target-host root@<ip_address_of_ghaf-host> --fast switch

...

tip

With the -debug targets, debug Ethernet is enabled on the host. With Lenovo X1 Carbon, you can connect a USB-Ethernet adapter for debug and development access.
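The deployment command above differs only in the flake target and the host address, so it can be assembled by a small helper. This is a sketch; the deploy_cmd name is illustrative, and the flags are the ones shown in the examples above.

```shell
#!/bin/sh
# Sketch: assemble the nixos-rebuild invocation shown above for a given
# flake target and host address. deploy_cmd is an illustrative name.

deploy_cmd() {
    target=$1   # e.g. lenovo-x1-carbon-gen11-debug
    host=$2     # IP address of ghaf-host
    if [ -z "$target" ] || [ -z "$host" ]; then
        echo "usage: deploy_cmd <flake-target> <host-ip>" >&2
        return 1
    fi
    printf 'nixos-rebuild --flake .#%s --target-host root@%s --fast switch\n' \
        "$target" "$host"
}
```

The helper only prints the command, so it can be reviewed before being run from the Ghaf source directory.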

Pull requests are the way for contributors to submit code to the Ghaf project. For more information, see Contribution Guidelines.

Build and Run

This tutorial assumes that you already have basic git experience.

The canonical URL for the upstream Ghaf git repository is https://github.com/tiiuae/ghaf. To try Ghaf, you can build it from the source.

warning

Cross-compilation support is currently under development and not available for the building process.

Prerequisites

First, follow the basic device-independent steps:

- Clone the git repository https://github.com/tiiuae/ghaf.

- Ghaf uses a Nix flake approach to build the framework targets. Make sure to:

  - Install Nix or full NixOS if needed: https://nixos.org/download.html.

  - Enable flakes: https://nixos.wiki/wiki/Flakes#Enable_flakes.

  To see all Ghaf-supported outputs, type nix flake show.

- Set up an AArch64 remote builder: https://nixos.org/manual/nix/stable/advanced-topics/distributed-builds.html.
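Before running nix flake show, it can help to confirm that flakes are enabled in the Nix configuration. The following is a minimal sketch; the flakes_enabled helper is hypothetical, and the configuration file location is an assumption that depends on how Nix was installed.

```shell
#!/bin/sh
# Sketch: report whether a nix.conf file enables both nix-command and flakes.
# flakes_enabled is an illustrative helper, not a Nix or Ghaf command.

flakes_enabled() {
    conf=$1
    [ -r "$conf" ] || return 1
    # Collect the experimental-features setting(s) from the file.
    features=$(grep -E '^[[:space:]]*(extra-)?experimental-features[[:space:]]*=' "$conf")
    # Both feature names must be present.
    case " $features " in
        *flakes*) ;;
        *) return 1 ;;
    esac
    case " $features " in
        *nix-command*) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example, flakes_enabled /etc/nix/nix.conf returns success when the system-wide configuration lists both features.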

Then you can use one of the following instructions for the supported targets:

| Device | Architecture | Instruction |
|---|---|---|
| Virtual Machine | x86_64 | Running Ghaf Image for x86 VM (ghaf-host) |
| Generic x86 Computer | x86_64 | Running Ghaf Image for x86 Computer |
| Lenovo X1 Carbon Gen 11 | x86_64 | Running Ghaf Image for Lenovo X1 |
| NVIDIA Jetson AGX Orin | AArch64 | Ghaf Image for NVIDIA Jetson Orin AGX |
| NXP i.MX 8MP-EVK | AArch64 | Building Ghaf Image for NXP i.MX 8MP-EVK |
| MICROCHIP icicle-kit | RISCV64 | Building Ghaf Image for Microchip Icicle Kit |

Running Ghaf Image for x86 VM (ghaf-host)

Before you begin, check device-independent prerequisites.

From the ghaf source directory, run the nix run .#packages.x86_64-linux.vm-debug command.

This creates the ghaf-host.qcow2 copy-on-write overlay disk image in your current directory. After an unclean shutdown of the QEMU VM, you might get unexpected errors the next time you boot. Removing ghaf-host.qcow2 should be enough to recover. To cleanly shut down the VM, from the menu bar of the QEMU Window, click Machine and then Power Down.
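The recovery step after an unclean shutdown can be sketched as follows. The reset_vm_overlay helper is an illustrative name; it only removes the overlay file named above from the given directory.

```shell
#!/bin/sh
# Sketch: remove a stale ghaf-host.qcow2 overlay left by an unclean shutdown.
# reset_vm_overlay is an illustrative helper name.

reset_vm_overlay() {
    dir=${1:-.}                      # directory where nix run was started
    overlay="$dir/ghaf-host.qcow2"
    if [ -f "$overlay" ]; then
        rm -f -- "$overlay" && echo "removed $overlay"
    else
        echo "no overlay found in $dir"
    fi
}
```

Calling reset_vm_overlay in the ghaf source directory before starting the VM again gives the next boot a fresh overlay.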

Running Ghaf Image for x86 Computer

Before you begin, check device-independent prerequisites.

Do the following:

- To build the target image, run the command:

  nix build github:tiiuae/ghaf#generic-x86_64-debug

- After the build is completed, prepare a USB boot media with the target image you built using the flash.sh script:

  ./packages/flash/flash.sh -d /dev/<YOUR_USB_DRIVE> -i result/<IMAGE_NAME>

- Boot the computer from the USB media.
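Because flashing overwrites the target device, a small guard around the flash.sh call can catch a mistyped device path. This is a sketch only; safe_flash is a hypothetical wrapper, while flash.sh and its -d and -i options are taken from the steps above.

```shell
#!/bin/sh
# Sketch: refuse to call flash.sh unless the target is a block device and the
# image file exists. safe_flash is an illustrative wrapper (an assumption).

safe_flash() {
    device=$1   # e.g. /dev/<YOUR_USB_DRIVE>
    image=$2    # e.g. result/<IMAGE_NAME>
    if [ ! -b "$device" ]; then
        echo "error: $device is not a block device" >&2
        return 1
    fi
    if [ ! -f "$image" ]; then
        echo "error: image $image not found" >&2
        return 1
    fi
    # Run from the ghaf source directory, as in the steps above.
    ./packages/flash/flash.sh -d "$device" -i "$image"
}
```

The guard does not check that the device is the intended one, so the device name should still be confirmed, for example with lsblk, before flashing.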

Running Ghaf Image for Lenovo X1

Lenovo X1 is the reference x86_64 device for the Ghaf project.

Do the following:

- To build the target image, run the command:

  nix build github:tiiuae/ghaf#lenovo-x1-carbon-gen11-debug

- After the build is completed, prepare a USB boot media with the target image you built using the flash.sh script:

  ./packages/flash/flash.sh -d /dev/<YOUR_USB_DRIVE> -i result/<IMAGE_NAME>

- Boot the computer from the USB media.

Ghaf Image for NVIDIA Jetson Orin AGX

Before you begin:

- Check device-independent prerequisites.

- If you use a new device, flash bootloader firmware first. Then you can build and run a Ghaf image.

Flashing NVIDIA Jetson Orin AGX

- Run the command:

  nix build github:tiiuae/ghaf#nvidia-jetson-orin-agx-debug-flash-script

  The above command is for AGX 32GB version. For the AGX 64GB RAM version please use:

  nix build github:tiiuae/ghaf#nvidia-jetson-orin-agx64-debug-flash-script

  It will build the Ghaf image and bootloader firmware, and prepare the flashing script. Give "yes" answers to all script questions. The building process takes around 1.5 hours.

- Set up the following connections:

  - Connect the board to a power supply with a USB-C cable.

  - Connect a Linux laptop to the board with the USB-C cable.

  - Connect the Linux laptop to the board with a Micro-USB cable to use serial interface.

  For more information on the board's connections details, see the Hardware Layout section of the Jetson AGX Orin Developer Kit User Guide.

- After the build is completed, put the board in recovery mode. For more information, see the Force Recovery Mode section in the Jetson AGX Orin Developer Kit User Guide.

- Run the flashing script:

  sudo ~/result/bin/flash-ghaf-host

  There is a time-out for this operation, so run the script within one minute after putting the device in recovery mode. If you get the error message "ERROR: might be timeout in USB write.":

  - Reboot the device and put it in recovery mode again.

  - Check with the lsusb command if your computer can still recognize the board, and run the flash script again.

- Restart the device after flashing is done.
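The lsusb check mentioned in the flashing steps can be sketched as a filter on the lsusb output. This sketch assumes the board enumerates under NVIDIA's USB vendor ID (0955) while in recovery mode; it matches any NVIDIA USB device, and nvidia_usb_present is an illustrative name.

```shell
#!/bin/sh
# Sketch: check lsusb output for a device with NVIDIA's USB vendor ID (0955).
# nvidia_usb_present is an illustrative helper; it reads lsusb output on stdin.

nvidia_usb_present() {
    grep -qi 'ID 0955:'
}
```

A typical use is lsusb | nvidia_usb_present, followed by the flashing script only when the check succeeds.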

Building and Running Ghaf Image for NVIDIA Jetson Orin AGX

After the latest firmware is flashed, it is possible to use a simplified process by building only the Ghaf disk image and running it from external media:

- To build the target image, run the command:

  nix build github:tiiuae/ghaf#nvidia-jetson-orin-agx-debug-from-x86_64

  The above command is for AGX 32GB version. For the AGX 64GB RAM version please use:

  nix build github:tiiuae/ghaf#nvidia-jetson-orin-agx64-debug

- After the build is completed, prepare a USB boot media with the target image you built using the flash.sh script:

  ./packages/flash/flash.sh -d /dev/<YOUR_USB_DRIVE> -i result/sd-image/<IMAGE_NAME>

- Boot the hardware from the USB media.

In the current state of Ghaf, it is a bit tricky to make NVIDIA Jetson Orin AGX boot Ghaf from a USB if the same thing has already been flashed on the board's eMMC. To succeed, you can change partition labels on eMMC (or optionally wipe everything by formatting):

- Log in as a root:

  sudo su

- Check the current labels:

  lsblk -o name,path,fstype,mountpoint,label,size,uuid

- Change the ext4 partition label:

  e2label /dev/mmcblk0p1 nixos_emmc

- Change the vfat partition label:

  dosfslabel /dev/mmcblk0p2 ESP_EMMC

- Verify the labels that were changed:

  lsblk -o name,path,fstype,mountpoint,label,size,uuid

- After these changes NVIDIA Jetson Orin AGX cannot boot from its internal eMMC. It will boot from the USB device with the correct partition labels.
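The verification step can also be scripted. The sketch below reads name and label pairs, as printed by lsblk -rno NAME,LABEL, and succeeds only if both eMMC partitions carry the new labels used above; emmc_relabelled is an illustrative name.

```shell
#!/bin/sh
# Sketch: confirm the eMMC partitions carry the new labels.
# Reads "NAME LABEL" pairs on stdin, e.g. from: lsblk -rno NAME,LABEL
# emmc_relabelled is an illustrative helper name.

emmc_relabelled() {
    awk '
        $1 == "mmcblk0p1" && $2 == "nixos_emmc" { ext4 = 1 }
        $1 == "mmcblk0p2" && $2 == "ESP_EMMC"   { vfat = 1 }
        END { exit !(ext4 && vfat) }
    '
}
```

For example, lsblk -rno NAME,LABEL | emmc_relabelled returns success once both labels have been changed.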

Building Ghaf Image for NXP i.MX 8MP-EVK

Before you begin, check device-independent prerequisites.

In the case of i.MX8, Ghaf deployment consists of creating a bootable SD card and USB media with the Ghaf image:

- To build and flash the Ghaf image:

  - Run the nix build .#packages.aarch64-linux.imx8mp-evk-release command.

  - Prepare the USB boot media with the target HW image you built:

    ./packages/flash/flash.sh -d /dev/<YOUR_USB_DRIVE> -i result/<IMAGE_NAME>

- Insert an SD card and USB boot media into the board and switch the power on.

Building Ghaf Image for Microchip Icicle Kit

Before you begin:

- Check device-independent prerequisites.

- Make sure HSS version 0.99.35-v2023.02 is programmed in your board eNVM. The version can be seen in the pre-bootloader log. Check the video guide to build HSS and program the eNVM: How to build HSS and program the eNVM?

In the case of the Icicle Kit, Ghaf deployment consists of creating an SD image with U-Boot and Linux kernel from Microchip, and Ghaf-based NixOS rootfs:

- Build a Ghaf SD image:

  a. Run the nix build .#packages.riscv64-linux.microchip-icicle-kit-release command to build the release image.

  b. Run the nix build .#packages.riscv64-linux.microchip-icicle-kit-debug command to build the debug image.

- Flash the Ghaf SD image:

  - If you want to use an SD card:

    - Prepare the SD card with the target HW image you built:

      ./packages/flash/flash.sh -d /dev/<YOUR_SD_CARD> -i result/<IMAGE_NAME>

    - Insert an SD card into the board and switch the power on.

  - If you want to use the onboard MMC:

    - You can directly flash a NixOS image to an onboard MMC card:

      ./packages/flash/flash.sh -d /dev/<YOUR_MMC_DEVICE> -i result/<IMAGE_NAME>

    For more information on how to access the MMC card as a USB disk, see MPFS Icicle Kit User Guide.

Running Remote Build on NixOS

To set up a remote build on NixOS:

- Identify required SSH keys for remote SSH connection.

- Set up configurations.

If you hit an issue, check Troubleshooting.

1. Configuring SSH Keys

important

This step assumes that public SSH keys were generated and copied (ssh-copy-id) both for normal and root users. For more information, see Setting up public key authentication.

Before you begin, make sure an SSH connection is established to the remote host for both normal and root users:

ssh USER@IP_ADDRESS_OF_REMOTE_MACHINE

nix store ping --store ssh://USER@REMOTE_IP_ADDRESS

1.1. [Local Machine] Configuring SSH Keys

Do the following on a local machine:

- Change to the .ssh directory in your home directory:

  cd .ssh

  The public keys of the remote machine are located in the known_hosts file. These keys are created and configured after the ssh-copy-id command. Make sure the keys are there. If they are not there:

  - Access the remote machine.

  - Run cd /etc/ssh.

  - Retrieve and copy the public keys.

  - Go back to the local machine and paste them into known_hosts.

- Navigate to the /etc/ssh/ directory:

  cd /etc/ssh

  Make sure the ssh_known_hosts file contains the same public keys as the remote machine (same as .ssh/known_hosts). Otherwise, specify it in the configuration.nix file.

- Switch to the root user on the command line:

  sudo -i

- Make sure the root user's keys are different from the user's keys:

  cd .ssh

  .ssh is a user-level access and /etc/ssh is system-wide.

1.2. Accessing Remote Machine Using SSH

Do the following:

- Navigate to the authorized_keys file:

  ssh USER@IP_ADDRESS_OF_REMOTE_MACHINE

  cd .ssh

  sudo nano authorized_keys

- Make sure that both user and root public keys for the local machine are located there:

  - The user's public key can be obtained from /home/username/.ssh/id_rsa.pub.

  - The root user's public key can be obtained from /root/.ssh/id_rsa.pub.

2. Setting Up Configuration Files

2.1. [Local Machine] Setting Up Configuration Files

Do the following on a local machine:

- Set configuration variables in configuration.nix and nix.conf:

  - Use the following commands:

    cd /etc/nixos

    sudo nano configuration.nix

  - Add lib in the header like so: { config, pkgs, lib, ... }:.

  - Edit the nix.conf file:

    environment.etc."nix/nix.conf".text = lib.mkForce ''
      # Your custom nix.conf content here
      builders = @/etc/nix/machines
      require-sigs = false
      max-jobs = 0 # to use remote build by default not local
      substituters = https://cache.nixos.org/
      trusted-public-keys = cache.nixos.org-1:6pb16ZPMQpcDShjY= cache.farah:STwtDRDeIDa...
      build-users-group = nixbld
      trusted-users = root farahayyad
      experimental-features = nix-command flakes
    '';

    For more information, see the nix.conf section of the Nix Reference Manual.

  - Rebuild NixOS by running:

    sudo nixos-rebuild switch

- Create or set the machines file:

  - Use the following commands:

    cd /etc/nixos

    sudo nano machines

  - Specify the SSH settings:

    [ssh://]USER@HOST target-spec [SSH identity file] [max-jobs] [speed factor] [supported-features] [mandatory-features] [ssh-public-key-encoded]

    - Parameters inside '[ ]' are optional.

    - The ssh-public-key-encoded is the base-64 encoded public key of the remote machine. Get the encoding using:

      echo -n "your_public_key_here" | base64

    - If omitted, SSH will use its regular known_hosts file.

    For more information, see the Remote Builds section of the Nix Reference Manual.
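The field layout of a machines file entry can be illustrated with a small generator. This is a sketch under stated assumptions: machines_line is a hypothetical helper, and it relies on the Nix convention that a hyphen leaves an optional field at its default value.

```shell
#!/bin/sh
# Sketch: compose one line of the machines file from its fields.
# machines_line is an illustrative helper; "-" leaves a field at its default.

machines_line() {
    user_host=$1          # USER@HOST
    system=$2             # target-spec, e.g. aarch64-linux
    identity=${3:--}      # SSH identity file
    max_jobs=${4:--}
    speed=${5:--}
    host_key=$6           # optional public key of the remote machine
    line="ssh://$user_host $system $identity $max_jobs $speed"
    if [ -n "$host_key" ]; then
        # Supported and mandatory features stay at their defaults ("-").
        encoded=$(printf '%s' "$host_key" | base64 | tr -d '\n')
        line="$line - - $encoded"
    fi
    printf '%s\n' "$line"
}
```

For example, machines_line builder@10.0.0.5 aarch64-linux /root/.ssh/id_ed25519 8 1 prints an entry with five fields. The user name, address, and key path here are placeholders.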

2.2. [Remote Machine] Setting Up Configuration Files

Do the following on a remote machine:

- Specify the sshd_config settings:

  - Use the following commands:

    cd /etc/ssh

    sudo nano sshd_config

  - Make sure PubkeyAuthentication is set to yes.

- Specify the /etc/nix/nix.conf settings:

  - Use the following commands:

    cd /etc/nix

    sudo nano nix.conf

  - Edit the nix.conf file:

    trusted-public-keys = cache.nixos.org-1:61o0gWypbMrAURk...
    build-users-group = nixbld
    require-sigs = false
    trusted-users = root farahayyad jk
    binary-caches = https://cache.nixos.org/
    substituters = https://cache.nixos.org/
    system-features = nixos-test benchmark big-parallel kvm
    binary-cache-public-keys = cache.nixos.org-1:6NCHD59X43...
    experimental-features = nix-command flakes

  - Run the following command to restart the daemon and apply the preceding changes:

    systemctl restart nix-daemon.service

Troubleshooting

- Single-User Nix Installation Issues

- VPN Setup for Remote Access

- Private Key on Local Machine Not Matching Public Key on Remote Machine

Single-User Nix Installation Issues

This issue typically arises when Nix is installed in a single-user mode on the remote machine, which can create permission issues during multi-user operations.

If an operation fails with the following error message:

could not set permissions on '/nix/var/nix/profiles/per-user' to 755: Operation not permitted

reinstall Nix in a multi-user setup:

- Uninstall the single-user Nix installation:

  rm -rf /nix

- Install Nix in a multi-user mode:

  sh <(curl -L https://nixos.org/nix/install) --daemon

For more information about Nix security modes, see the Security section of the Nix Reference Manual.

VPN Setup for Remote Access

A VPN is needed if the local machine is not on the same local network as the remote build machine.

To set up a VPN using OpenConnect, do the following:

- Install OpenConnect:

nix-env -iA nixos.openconnect

- Establish a VPN connection:

sudo openconnect --protocol=gp -b access.tii.ae

- Once authenticated, you establish a secure connection to your network. Use ssh USER@IP_ADDRESS_OF_REMOTE_MACHINE to check if it is possible to connect to the remote machine.

Private Key on Local Machine Not Matching Public Key on Remote Machine

Using mismatched key pairs could result in the Permission denied error.

Double-check that you are using the right key pairs.

If you choose to present your local machine's RSA private key, make sure that the corresponding RSA public key, not the ED25519 or ECDSA public key, is in the remote machine's authorized_keys file.
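The key-pair check can be sketched as a text comparison between a public key and the entries of an authorized_keys file. The key_authorized helper is hypothetical; it compares only the key type and key body and ignores the trailing comment.

```shell
#!/bin/sh
# Sketch: check whether a public key is listed in an authorized_keys file.
# key_authorized is an illustrative helper. It compares the key type and the
# base64 key body (the first two fields) and ignores the trailing comment.

key_authorized() {
    pubkey=$1          # e.g. output of: ssh-keygen -y -f ~/.ssh/id_rsa
    auth_file=$2       # e.g. a copy of the remote authorized_keys file
    needle=$(printf '%s\n' "$pubkey" | awk 'NF >= 2 { print $1 " " $2; exit }')
    [ -n "$needle" ] && grep -qF -- "$needle" "$auth_file"
}
```

The public key that belongs to a private key can be derived with ssh-keygen -y -f followed by the private key path, so a mismatch between, for example, an RSA private key and an ED25519 entry shows up as a failed check.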

Installer

Configuring and Building Installer for Ghaf

You can obtain the installation image for your Ghaf configuration.

In addition to the live USB image that Ghaf provides, it is also possible to install Ghaf. This can be achieved either by downloading the desired image or by building it as described below.

Currently, only x86_64-linux systems are supported by the standalone installer.

To build, for example, the debug image for the Lenovo X1, use the following command:

nix build .#lenovo-x1-carbon-gen11-debug-installer

Flashing Installer

Once the image is built, you must transfer it to the desired installation media. At the time of writing, it requires at least a 4GB SSD.

sudo dd if=./result/iso/ghaf-<version>-x86_64-linux.iso of=/dev/<SSD_NAME> bs=32M status=progress; sync

Installing Image

caution

This operation is destructive and will overwrite your system.

Insert the SSD into the laptop, boot, and select the option to install.

Then use the following command:

sudo ghaf-install.sh

Check the available options shown in the prompt for the install target. Mind that /dev/sdX is likely the install medium.

Enter the install target, including the /dev prefix, and press [Enter] on the keyboard to complete the process.

sudo reboot

Remove the installer drive.

Cross-Compilation

warning

Cross-compilation is currently under development and cannot be used properly on all the supported device configurations.

Ghaf is targeted at a range of devices and form factors that support different instruction set architectures (ISA). Many small form-factor edge devices are not powerful enough to compile the needed applications or OSs that run on them. As the most common ISA used in desktops and servers is x86, this will generally require that the code is cross-compiled for the target ISA, for example, AArch64 or RISC-V.

NixOS and Nixpkgs have good support for cross-compilation. However, there are still some packages that cannot be compiled in this way.

Cross-Compilation for Microchip Icicle Kit (RISCV64)

An SD image for the Microchip Icicle Kit can be cross-compiled from an x86 machine. To generate a release or debug SD image, run the following command:

$> nix build .#packages.riscv64-linux.microchip-icicle-kit-<release/debug>
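The release/debug placeholder in the command above can be resolved by a small helper that accepts only the two variants. This is a sketch; icicle_target is an illustrative name.

```shell
#!/bin/sh
# Sketch: map a build variant to the Icicle Kit flake output named above.
# icicle_target is an illustrative helper name.

icicle_target() {
    case $1 in
        release|debug)
            printf '.#packages.riscv64-linux.microchip-icicle-kit-%s\n' "$1" ;;
        *)
            echo "variant must be 'release' or 'debug'" >&2
            return 1 ;;
    esac
}
```

The printed value can then be passed to nix build from the ghaf source directory.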

Future Cross-Compilation Support

This will involve working with upstream package maintainers to ensure that the packages are cross-compilation aware. This will be addressed on a package-by-package basis.

binfmt Emulated Build

binfmt allows running binaries built for a different ISA on a development machine. This is achieved by running the target binary in an emulator such as QEMU or in a VM. So while not cross-compiled, it can enable development for some embedded device configurations.

To enable binfmt, we recommend setting the following in your host system's configuration.nix:

boot.binfmt.emulatedSystems = [

"riscv64-linux"

"aarch64-linux"

];

In addition, it is recommended to enable KVM support with either

boot.kernelModules = [ "kvm-amd" ];

or

boot.kernelModules = [ "kvm-intel" ];

depending on whether your development host has an AMD or Intel processor.
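Which of the two modules applies can be read from the CPU flags: Intel VT-x is reported as vmx and AMD-V as svm in /proc/cpuinfo. The following sketch prints the matching module name; kvm_module is an illustrative helper that reads cpuinfo text on standard input.

```shell
#!/bin/sh
# Sketch: pick the KVM kernel module from CPU flags.
# Intel VT-x appears as the "vmx" flag and AMD-V as "svm" in /proc/cpuinfo.
# kvm_module is an illustrative helper; it reads cpuinfo text on stdin.

kvm_module() {
    # Use the flags line of the first CPU only.
    flags=$(grep '^flags' | head -n 1)
    case " $flags " in
        *" vmx "*) echo "kvm-intel" ;;
        *" svm "*) echo "kvm-amd" ;;
        *) echo "no hardware virtualization flag found" >&2; return 1 ;;
    esac
}
```

On an x86 development host, kvm_module < /proc/cpuinfo prints the value to place in boot.kernelModules.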

Creating Application VM

Application VM (App VM) is a VM that improves trust in system components by isolating applications from the host OS and other applications. Virtualization with hardware-backed mechanisms provides better resource protection than a traditional OS. This lets users use applications of different trust levels within the same system without compromising system security. While the VMs have overhead, it is acceptable given the improved security and the usability that makes the application seem like it is running inside an ordinary OS.

As a result, both highly trusted applications and untrusted applications can be hosted in the same secure system when the concerns are separated in their own App VM.

To create an App VM, do the following:

- Create the new configuration file for your VM in the modules/reference/appvms directory.

  You can use an already existing VM file as a reference, for example: modules/reference/appvms/business.nix.

  Each VM has the following properties:

  | Property | Type | Unique | Description | Example |
  |---|---|---|---|---|
  | name | str | yes | This name is postfixed with -vm and will be shown in microvm list. The name, for example, chromium-vm will be also the VM hostname. The length of the name must be 8 characters or less. | "chromium" |
  | packages | list of types.package | no | Packages to include in a VM. It is possible to make it empty or add several packages. | [chromium top] |
  | macAddress | str | yes | Needed for network configuration. | "02:00:00:03:03:05" |
  | ramMb | int, [1, …, host memory] | no | Memory in MB. | 3072 |
  | cores | int, [1, …, host cores] | no | Virtual CPU cores. | |

- Create a new option for your VM in modules/reference/appvms/default.nix. For example:

business-vm = lib.mkEnableOption "Enable the Business appvm";

new-vm = lib.mkEnableOption "Enable the New appvm"; # your new vm here

++ (lib.optionals cfg.business-vm [(import ./business.nix {inherit pkgs lib config;})])

++ (lib.optionals cfg.new-vm [(import ./new_vm_name.nix {inherit pkgs lib config;})]); # your new vm here

- Add your new VM to the profile file, for example mvp-user-trial.nix:

business-vm = true;

new-vm = true; # your new vm here

note

For more information on creating new profiles, see Profiles Configuration.

- Add an IP and the VM name in modules/common/networking/hosts.nix. For example:

{

ip = 105;

name = "business-vm";

}

- Add an application launcher in modules/common/services/desktop.nix.

  A launcher element has the following properties:

  - name: the name of the launcher;

  - path: path to the executable you want to run, like a graphical application;

  - icon: an optional icon for the launcher. If not specified, the system will attempt to find an icon matching the name. You can set this to the name of an icon you expect to be available in the current icon theme (currently "Papirus", defined in modules/desktop/graphics/labwc.nix), or provide a full path to a specific icon file.
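Two of the App VM properties listed in the first step have constraints that are easy to check before writing the configuration file: the name must be 8 characters or less, and macAddress is expected to be a colon-separated MAC address. The sketch below checks both; the helper names are illustrative, and the MAC pattern is an assumption based on the example value.

```shell
#!/bin/sh
# Sketch: check two App VM properties before writing the configuration file:
# the name must be 8 characters or less, and macAddress must look like
# six colon-separated hex pairs. Both helper names are illustrative.

valid_vm_name() {
    name=$1
    [ -n "$name" ] && [ "${#name}" -le 8 ]
}

valid_mac() {
    printf '%s\n' "$1" | grep -Eq '^([0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2}$'
}
```

For example, valid_vm_name chromium succeeds because the name is exactly 8 characters long, while a 9-character name is rejected.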

Hardware Configuration

All configuration files for reference target devices are in modules/hardware.

The ghaf-24.06 release supports the following target hardware:

- NVIDIA Jetson AGX Orin

- NVIDIA Jetson Orin NX

- Generic x86 (PC)

- Polarfire Icicle Kit

- Lenovo ThinkPad X1 Carbon Gen 11

- Lenovo ThinkPad X1 Carbon Gen 10

- NXP i.MX 8M Plus

To add a new hardware configuration file, do the following:

-

Create a separate folder for the device in modules/hardware.

-

Create the new configuration file with hardware-dependent parameters like host information, input and output device parameters, and others.

You can use an already existing file as a reference, for example modules/hardware/lenovo-x1/definitions/x1-gen11.nix.

Profiles Configuration

A profile is a set of software needed for a particular use case. All profiles configuration files are in modules/profiles.

To add a new profile, do the following:

- Create your own configuration file using modules/profiles/mvp-user-trial.nix as a reference.

- Depending on the location of your reference appvms, services, or programs change the includes to point to them.

- Create a new enable option to enable the profile, for example, new-cool-profile.

- In the lower section, under the correct area (appvms, services, programs), make sure to describe additional definitions you need.

For example, a safe-and-unsafe-browsing.nix file with a simple setup that includes business-vm and chromium-vm could look like this:

config = lib.mkIf cfg.enable {
  ghaf = {
    reference = {
      appvms = {
        enable = true;
        chromium-vm = true;
        business-vm = true;
      };
      services = {
        enable = true;
      };
      programs = {
      };
    };
    profiles = {
      laptop-x86 = {
        enable = true;
        netvmExtraModules = [../reference/services];
        guivmExtraModules = [../reference/programs];
        inherit (config.ghaf.reference.appvms) enabled-app-vms;
      };
    };
  };
};

labwc Desktop Environment

labwc is a configurable and lightweight wlroots-based Wayland-compatible desktop environment.

To use labwc as your default desktop environment, add it as a module to Ghaf:

- change the configuration option profiles.graphics.compositor = "labwc" or

- uncomment the corresponding line in the guivm.nix file.

The labwc configuration is based on the following set of files: rc.xml, menu.xml, autostart, and environment. These files can be edited by substituting in the labwc overlay overlays/custom-packages/labwc/default.nix.

Window Border Coloring

The border color concept illustrates the application trustworthiness in a user-friendly manner. The color shows the application's security level and helps users avoid mistakes. The same approach can be found in other projects, for example, Qubes OS.

Ghaf uses a patched labwc, which makes it possible to change the border color for the chosen application. The implementation is based on window rules by substituting the server decoration colors (serverDecoration = yes). The borderColor property is responsible for the frame color.

important

According to the labwc specification, the identifier parameter is case-sensitive and relates to app_id for native Wayland windows and WM_CLASS for XWayland clients.

For example, the foot terminal with an aqua-colored frame and Firefox with a red frame:

<windowRules>

<windowRule identifier="Foot" borderColor="#00FFFF" serverDecoration="yes" skipTaskbar="yes" />

<windowRule identifier="firefox" borderColor="#FF0000" serverDecoration="yes" skipTaskbar="yes" />

</windowRules>
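Rules of this shape can be generated for other applications. The sketch below emits one windowRule element and rejects colors that are not in #RRGGBB form; window_rule is an illustrative helper, and the attributes mirror the example above.

```shell
#!/bin/sh
# Sketch: emit a labwc windowRule element with a border color.
# window_rule is an illustrative helper; the attributes mirror the example above.

window_rule() {
    identifier=$1   # app_id or WM_CLASS, case-sensitive
    color=$2        # border color in #RRGGBB form
    if ! printf '%s\n' "$color" | grep -Eq '^#[0-9A-Fa-f]{6}$'; then
        echo "color must be in #RRGGBB form" >&2
        return 1
    fi
    printf '<windowRule identifier="%s" borderColor="%s" serverDecoration="yes" skipTaskbar="yes" />\n' \
        "$identifier" "$color"
}
```

The output is a single line that can be placed inside the windowRules element of rc.xml.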

COSMIC Desktop Environment

COSMIC is a modern, configurable, and lightweight Wayland desktop environment developed by System76. It is designed to be fast, efficient and user-friendly while maintaining a professional appearance. As of February 2025, COSMIC's latest release is Alpha 6, with a beta release planned in the coming months.

Enabling COSMIC in Ghaf

While COSMIC is not the default desktop environment in Ghaf, it can be enabled manually. To enable COSMIC DE in Ghaf, modify the graphics configuration as shown below:

profiles.graphics.compositor = "cosmic";

This configuration sets COSMIC as the active desktop environment for Ghaf.

Note: Starting from April 2025, COSMIC DE is fully integrated into NixOS options, and its tools are included in nixpkgs. Prior to this, COSMIC had to be added to Ghaf from the external flake nixos-cosmic.

Configuration Components

COSMIC's configuration in Ghaf consists of several key components:

1. Core COSMIC DE Configuration

COSMIC handles its configuration via simple Rust Object Notation (RON) files located in the user's home directory under .config/cosmic.

In the Ghaf Nix configuration, however, we have introduced a conversion mechanism where the entire directory tree is represented by a single YAML file (cosmic.config.yaml). This YAML file acts as the system default COSMIC configuration and is applied to all fresh installations of Ghaf.

If the user makes manual changes to the configuration while using Ghaf, those changes will take precedence over the system defaults.

2. Ghaf COSMIC Nix Configuration

The cosmic.nix module in Ghaf customizes COSMIC to better align with the system's requirements. Below are the key modifications and adjustments made:

Disabled COSMIC Settings pages

- User Management: The page-users feature in COSMIC settings is disabled.

- Power Settings: The page-power feature is removed, as power management is handled by swayidle and ghaf-powercontrol.

- Sound Settings: The page-sound feature is disabled, with audio control managed by a custom service.

Replacements and Overrides

- Icon Theme: The default COSMIC icon theme is changed to Papirus icon theme.

- GTK Settings: Some default GTK settings are applied to ensure a consistent look and feel.

- Session Management: COSMIC's session management integrates with ghaf-session.target for better control of Ghaf services.

- Power Management: swayidle replaces cosmic-idle as the default idle and power manager, including configuration for automatic suspend and brightness adjustments.

- Audio Control: A custom Ghaf audio control service works alongside the COSMIC audio applet to provide Ghaf-specific audio control features.

Additional Changes

- DBUS Proxy Integration: Custom DBUS proxy sockets are added for audio, network, and Bluetooth applets.

- Configuration Format: COSMIC's configuration is centralized into a single YAML file (cosmic.config.yaml) for easier management and deployment. This configuration is installed as an explicit package ghaf-cosmic-config, the derivation of which can be found in cosmic.nix.

- Disabled Services: Several default services, such as geoclue2, pipewire, and gnome-keyring, are explicitly disabled.

These changes ensure that COSMIC in Ghaf is tailored to the system's specific needs.

Configuration Files

The main configuration files are located in the modules/desktop/graphics/ directory:

modules/desktop/graphics/
├── cosmic-config/
│   ├── cosmic-config-to-yaml.sh   # Helper script to convert `.config/cosmic` to a YAML config
│   └── cosmic.config.yaml         # Main COSMIC desktop configuration which expands into `.config/cosmic`
└── cosmic.nix                     # Main COSMIC Nix configuration

Known Limitations

- COSMIC does not allow forcing Server-Side Decorations for apps running under COSMIC

- COSMIC does not yet support all common Wayland protocols (e.g. zwlr_virtual_pointer_manager_v1, etc.)

- COSMIC is still in relatively early development, with a Beta release planned some time in 2025

For more detailed information about COSMIC's architecture and features, visit the COSMIC Epoch repository and the System76 COSMIC Epoch homepage.

IDS VM Further Development

Implementation

The IDS VM is implemented as a regular Micro VM with static IP.

mitmproxy is included as a demonstrative interactive proxy to enable on-the-fly analysis of TLS-protected data. The Snort network intrusion detection and prevention system package is also included, but it has no dedicated UI and is not yet put to proper use.

Forcing network traffic to go through the IDS VM is crucial to its functionality. This is achieved by setting the IDS VM as the gateway of the other VMs in the dnsmasq configuration of the Net VM. There is a risk that someone could change the gateway settings of a VM to bypass the IDS VM. This, however, requires root (sudo) rights, and it is assumed here that these rights are available only in the debug build.
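The gateway assignment described above can be sketched as a NixOS dnsmasq setting. This is an illustrative fragment, not the actual Net VM configuration: the IP address is a placeholder, and the real module may set the option differently.

```nix
{
  # Sketch only: advertise the IDS VM as the default gateway
  # (DHCP "router" option) to the VMs served by dnsmasq.
  services.dnsmasq.settings = {
    # 192.168.100.4 is a hypothetical static IP of the IDS VM.
    dhcp-option = [ "option:router,192.168.100.4" ];
  };
}
```

Clients that obtain their lease from this dnsmasq instance then route outbound traffic through the advertised address.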

mitmproxy

mitmproxy is a free and open-source interactive HTTPS proxy. It is your Swiss Army Knife for debugging, testing, privacy measurements, and penetration testing. It can be used to intercept, inspect, modify and replay web traffic such as HTTP/1, HTTP/2, WebSockets, or any other SSL/TLS-protected protocols.

In the IDS VM, we use the mitmweb [1] tool to demonstrate mitmproxy's capabilities. It provides a web-based user interface that allows interactive examination and modification of HTTP(S) traffic. The mitmproxy package also includes a console tool that provides the same functionality in a text-based interface, and a command-line tool, mitmdump, to view, record, and programmatically transform HTTP(S) traffic.

The mitmweb tool runs in ids-vm as a systemd service and starts automatically when ids-vm boots. Its UI is served at http://localhost:8081, so it is reachable from ids-vm only. With SSH port forwarding, however, the UI can be accessed from other VMs. For that purpose, the GUI VM has a script, mitmweb-ui, that creates an SSH tunnel between ids-vm and chromium-vm, launches Chromium, and connects to the UI address.

Certificates

mitmproxy can decrypt encrypted traffic on the fly, as long as the client trusts mitmproxy's built-in certificate authority (CA). Currently, the CA certificates are the same for all ids-vm instances, as they are hardcoded in the IDS VM implementation. In the release version, they should be randomly generated and stored securely.

By default, no client trusts mitmproxy's CA, so the CA certificate has to be installed in the OS's CA store. However, many client applications (web browsers, for example) use their own CA bundles, and importing custom certificates there can be complicated or require manual user interaction. In our case, this difficulty is circumvented in chromium-vm by ignoring certificate verification errors if the certificate chain contains a certificate whose SPKI fingerprint matches that of mitmproxy's CA certificate. This does not degrade server verification security, since mitmproxy validates upstream certificates using the certifi Python package, which provides Mozilla's CA Bundle.

Some applications use certificate pinning to prevent man-in-the-middle attacks. As a consequence, these applications will not accept mitmproxy's certificates unless they are patched manually. Another option is to use mitmproxy's ignore_hosts option to prevent mitmproxy from intercepting traffic to these specific domains.
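The ignore_hosts approach can be sketched as a systemd service definition in Nix. This is a hedged illustration, not the actual ids-vm service: the unit name and domain are placeholders, the proxy mode and certificate options are omitted, and only the UI address from this document and the mitmweb flags --web-host, --web-port, and --ignore-hosts are used.

```nix
{ pkgs, ... }:
{
  # Hypothetical unit name; the real ids-vm service may be defined differently.
  systemd.services.mitmweb-sketch = {
    description = "mitmweb with pinned hosts excluded (illustrative)";
    wantedBy = [ "multi-user.target" ];
    serviceConfig.ExecStart = builtins.concatStringsSep " " [
      "${pkgs.mitmproxy}/bin/mitmweb"
      "--web-host localhost"
      "--web-port 8081"
      # The value is interpreted as a regular expression matched against the
      # host; pinned.example.com is a placeholder domain.
      "--ignore-hosts pinned.example.com"
    ];
  };
}
```

Traffic to hosts matching the pattern is forwarded without interception, so applications that pin certificates for those domains keep working, at the cost of that traffic not being inspected.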

[1] mitmproxy is an interactive, SSL/TLS-capable intercepting proxy with a console interface for HTTP/1, HTTP/2, and WebSockets. mitmweb is a web-based interface for mitmproxy. mitmdump is the command-line version of mitmproxy. Source: mitmproxy docs.

systemd Service Hardening

This document outlines systemd service configurations that significantly impact a service's exposure.

Tip: For more information on troubleshooting common issues with systemd services, see Troubleshooting with systemd.

The following configurations can be utilized to enhance the security of a systemd service:

1. Networking

1.1. PrivateNetwork

PrivateNetwork is useful for preventing the service from accessing the network.

Type: Boolean.

Default: false

Options:

- true: Creates a new network namespace for the service. Only the loopback device "lo" is available in this namespace; other network devices are not accessible.

- false: The service uses the host's network namespace and can access all the network devices available on the host. It can communicate over the network like any other process running on the host.
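A minimal sketch of enabling this option from a NixOS module follows. The service name example-service is a placeholder for a service that is defined elsewhere in the configuration.

```nix
{
  # Sketch only: run the (hypothetical) example-service in its own
  # network namespace, where only the loopback device exists.
  systemd.services.example-service.serviceConfig = {
    PrivateNetwork = true;
  };
}
```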

1.2. IPAccounting

IPAccounting helps in detecting unusual or unexpected network activity by a service.

Type: Boolean.

Default: false

Options:

- true: Enables accounting for all IPv4 and IPv6 sockets created by the service, keeping track of the data sent and received by each socket in the service.

- false: Disables tracking of the sockets created by the service.
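As a sketch, accounting can be switched on per service from a NixOS module. The service name is a placeholder for a service defined elsewhere.

```nix
{
  # Sketch only: count IP traffic for the (hypothetical) example-service.
  systemd.services.example-service.serviceConfig = {
    IPAccounting = true;
  };
}
```

With accounting enabled, the collected counters are exposed as unit properties such as IPIngressBytes and IPEgressBytes, which can be queried with systemctl show.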

1.3. IPAddressAllow, IPAddressDeny

IPAddressAllow=ADDRESS[/PREFIXLENGTH]…, IPAddressDeny=ADDRESS[/PREFIXLENGTH]…

Enables packet filtering on all IPv4 and IPv6 sockets created by the service. Useful for restricting a service to communicating only with certain IP addresses or networks, or for preventing it from reaching them.

Type: Space-separated list of IP addresses and/or symbolic names.

Default: All IP addresses are allowed and no IP addresses are explicitly denied.

Options:

- List of addresses: Specify the list of addresses allowed/denied. For example, ['192.168.1.8' '192.168.1.0/24']. An address that matches IPAddressAllow is always permitted; any other address is blocked only if it matches IPAddressDeny. An allow list is therefore typically combined with IPAddressDeny=any.

- Symbolic Names: The following symbolic names can also be used.

  - any: Any host (i.e., 0.0.0.0/0 ::/0).

  - localhost: All addresses on the local loopback (i.e., 127.0.0.0/8 ::1/128).

  - link-local: All link-local IP addresses (i.e., 169.254.0.0/16 fe80::/64).

  - multicast: All IP multicasting addresses (i.e., 224.0.0.0/4 ff00::/8).
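The allow/deny combination can be sketched as follows. The service name and the subnet are placeholders; the fragment assumes the service is defined elsewhere.

```nix
{
  # Sketch only: deny everything by default, then allow loopback
  # and one (hypothetical) local subnet.
  systemd.services.example-service.serviceConfig = {
    IPAddressDeny = "any";
    IPAddressAllow = [ "localhost" "192.168.1.0/24" ];
  };
}
```

The allow list is checked first, so addresses matching it pass even though IPAddressDeny covers every host.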

1.4. RestrictNetworkInterfaces

RestrictNetworkInterfaces is used to control which network interfaces a service has access to. This helps isolate services from the network or restrict them to specific network interfaces, enhancing security and reducing potential risk.

Type: Space-separated list of network interface names.

Default: The service can access all available network interfaces unless other network restrictions are in place.

Options:

- Specify individual network interface names to restrict the service to using only those interfaces.

- Prefix an interface name with '~' to invert the restriction, i.e. denying access to that specific interface while allowing all others.
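Both forms can be sketched in a NixOS module. The service and interface names below are placeholders chosen for illustration.

```nix
{
  # Sketch only: example-a may use just the listed interfaces.
  systemd.services.example-a.serviceConfig = {
    RestrictNetworkInterfaces = "lo eth0";
  };

  # Sketch only: example-b may use every interface except wlan0.
  systemd.services.example-b.serviceConfig = {
    RestrictNetworkInterfaces = "~wlan0";
  };
}
```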

1.5. RestrictAddressFamilies

RestrictAddressFamilies is used to control which address families a service can use. This setting restricts the service's ability to open sockets using specific address families, such as 'AF_INET' for IPv4, 'AF_INET6' for IPv6, or others. It is a security feature that helps limit the service's network capabilities and reduces its exposure to network-related vulnerabilities.

Type: List of address family names.

Default: If not configured, the service is allowed to use all available address families.

Options:

- none: Denies access to all address families.

- Specific Address Families: Specify one or more address families that the service is allowed to use, for example, 'AF_INET', 'AF_INET6', 'AF_UNIX'.

- Inverted Restriction: Prepend the character '~' to an address family name to deny access to it while allowing all others. For example, '~AF_INET' would block IPv4 access.
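An allow list of address families can be sketched as follows. The service name is a placeholder for a service defined elsewhere.

```nix
{
  # Sketch only: permit local (Unix) sockets and IPv4/IPv6 sockets;
  # all other address families are refused.
  systemd.services.example-service.serviceConfig = {
    RestrictAddressFamilies = [ "AF_UNIX" "AF_INET" "AF_INET6" ];
  };
}
```

A service that only talks over local sockets could narrow the list further to AF_UNIX alone.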


2. File System

2.1 ProtectHome

ProtectHome is used to restrict a service's access to home directories. It can either block access to /home, /root, and /run/user completely or make them appear empty to the service, thereby protecting user data from unauthorized access by system services.

Type: Boolean or String.

Default: false, i.e. the service has full access to home directories unless restricted by some other means.

Options:

- true: The service is completely denied access to home directories.

- false: The service has unrestricted access to home directories.

- read-only: The service can view the contents of home directories but cannot modify them.

- tmpfs: Mounts a temporary filesystem in place of home directories, ensuring the service cannot access or modify the actual user data. This creates a volatile in-memory filesystem where the service believes it has access to home, but any changes it makes do not affect the actual data and are lost when the service stops. This is particularly useful for services that require temporary space in a home directory.
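As a sketch, the option takes either a boolean or one of the string values in a NixOS module. The service name is a placeholder for a service defined elsewhere.

```nix
{
  # Sketch only: give the (hypothetical) example-service an empty,
  # throwaway view of the home directories.
  systemd.services.example-service.serviceConfig = {
    ProtectHome = "tmpfs"; # alternatives: true, false, "read-only"
  };
}
```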

2.2. ProtectSystem

ProtectSystem controls access to the system's root directory (/) and other essential system directories. This setting enhances security by restricting a service's ability to modify or access critical system files and directories.

Type: Boolean or String.

Default: false. The service has unrestricted access to system directories unless restricted by some other means.

Options:

- true: Mounts the directories /usr/, /boot, and /efi read-only for processes.

- full: Additionally mounts the /etc/ directory read-only.

- strict: Mounts the entire file system hierarchy read-only, except for essential API file system subtrees like /dev/, /proc/, and /sys/.

- false: Allows the service unrestricted access to system directories.

Using true or full is recommended for services that do not require access to system directories to enhance security and stability.
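The recommendation above can be sketched in a NixOS module as follows. The service name is a placeholder for a service defined elsewhere.

```nix
{
  # Sketch only: /usr/, /boot, /efi and /etc/ become read-only
  # for the (hypothetical) example-service.
  systemd.services.example-service.serviceConfig = {
    ProtectSystem = "full"; # alternatives: true, "strict", false
  };
}
```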

2.3. ProtectProc

ProtectProc controls access to the /proc filesystem for a service. This setting enhances security by restricting a service's ability to view or manipulate processes and kernel information in the /proc directory.

Type: String.

Default: default. No restriction is imposed from viewing or manipulating processes and kernel information in /proc.

Options:

- noaccess: Restricts access to most process metadata of other users in /proc.

- invisible: Hides processes owned by other users from view in /proc.

- ptraceable: Hides processes that cannot be traced (ptrace()) by other processes.

- default: Imposes no restrictions on access or visibility to /proc.
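A minimal sketch of selecting one of these values in a NixOS module follows. The service name is a placeholder for a service defined elsewhere.

```nix
{
  # Sketch only: hide processes of other users from the
  # (hypothetical) example-service when it reads /proc.
  systemd.services.example-service.serviceConfig = {
    ProtectProc = "invisible";
  };
}
```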

2.4. ReadWritePaths, ReadOnlyPaths, InaccessiblePaths, ExecPaths, NoExecPaths

These settings create a new file system namespace for executed processes, enabling fine-grained control over file system access.

- ReadWritePaths=: Paths listed here are accessible with the same access modes from within the namespace as from outside it.

- ReadOnlyPaths=: Allows reading from listed paths only; write attempts are refused even if file access controls would otherwise permit it.

- InaccessiblePaths=: Makes listed paths and everything below them in the file system hierarchy inaccessible to processes within the namespace.

- NoExecPaths=: Prevents execution of files from listed paths, overriding usual file access controls. Nest ExecPaths= within NoExecPaths= to selectively allow execution within directories otherwise marked non-executable.

Type: Space-separated list of paths.

Default: No restriction on file system access unless restricted by some other mechanism.

Options:

- Space-separated list of paths: Paths are relative to the host's root directory. Symlinks are resolved relative to the root directory specified by RootDirectory= or RootImage=.
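The path settings can be combined as in the following sketch. The service name and all paths except /nix/store are placeholders; the fragment assumes a NixOS system, where executables live under /nix/store.

```nix
{
  # Sketch only: path-level sandboxing for the (hypothetical) example-service.
  systemd.services.example-service.serviceConfig = {
    ReadOnlyPaths = [ "/etc/example" ];        # readable, never writable
    ReadWritePaths = [ "/var/lib/example" ];   # state directory stays writable
    InaccessiblePaths = [ "/var/lib/secret" ]; # hidden, including subdirectories
    NoExecPaths = [ "/" ];                     # nothing is executable by default
    ExecPaths = [ "/nix/store" ];              # except binaries from the Nix store
  };
}
```

Nesting ExecPaths inside NoExecPaths in this way keeps the service's own binaries runnable while preventing execution of anything written to other locations.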